The top five benefits of implementing edge AI in your next project

The future of smart technology is at the edge

Edge AI transforms artificial intelligence by moving computation and decision-making from centralized cloud servers to the edge of the network, right where data is generated. Instead of sending data to the cloud for processing, edge AI enables devices to analyze and act on it locally.

This shift brings significant benefits, including faster response times, greater efficiency, enhanced privacy, and improved reliability. As a result, edge AI is becoming essential across a wide range of applications. According to ReThink Technology Research, by early next decade, 74% of data will be processed outside traditional data centers.

The value of edge AI

1. Reduced latency for when microseconds matter

Edge AI delivers real-time processing by handling data directly on the device, eliminating the round trip to the cloud. This immediate response is essential for time-sensitive applications such as autonomous vehicles and industrial automation, where even milliseconds can make a difference.

2. Lower bandwidth and cloud costs

Edge AI minimizes data transfer to the cloud, reducing network load and lowering operational costs associated with cloud storage and processing.
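To put rough numbers on the bandwidth argument, the sketch below compares streaming raw sensor data to the cloud with sending only on-device inference results. The sensor configuration (a 3-axis accelerometer sampled at 1 kHz with 16-bit samples) and the 8-byte result message are hypothetical figures chosen for illustration, not measurements from any ST product.

```python
# Hypothetical figures for illustration only: a 3-axis accelerometer
# sampled at 1 kHz with 16-bit (2-byte) samples, versus an edge model
# that reports one 8-byte result message per second.

SECONDS_PER_DAY = 86_400


def raw_stream_bytes_per_day(sample_rate_hz: int, axes: int, bytes_per_sample: int) -> int:
    """Bytes per day if every raw sample is streamed to the cloud."""
    return sample_rate_hz * axes * bytes_per_sample * SECONDS_PER_DAY


def edge_result_bytes_per_day(results_per_second: int, bytes_per_result: int) -> int:
    """Bytes per day if only on-device inference results are sent."""
    return results_per_second * bytes_per_result * SECONDS_PER_DAY


raw = raw_stream_bytes_per_day(sample_rate_hz=1_000, axes=3, bytes_per_sample=2)
edge = edge_result_bytes_per_day(results_per_second=1, bytes_per_result=8)
print(f"raw: {raw / 1e6:.1f} MB/day, edge: {edge / 1e3:.1f} kB/day, ratio: {raw // edge}x")
# prints "raw: 518.4 MB/day, edge: 691.2 kB/day, ratio: 750x"
```

With these assumed figures, sending results instead of raw samples cuts daily traffic by a factor of 750; actual savings depend on the sampling rate, message format, and how often results are reported.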

3. Sustainable energy usage

Because edge AI transmits less data and can run on low-power hardware, it is an environmentally friendly option that aligns with modern energy conservation goals. In addition, edge AI can be deployed to improve energy management and power efficiency within the application itself.

4. Improved reliability and resilience

Edge devices can operate independently of network connectivity, ensuring continuous AI functionality even in remote or disconnected environments.
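One common way to keep working without a network is to make every decision locally and queue results for upload whenever a link is available. The minimal sketch below illustrates that pattern under stated assumptions: the `classify` function is a hypothetical stand-in for the on-device model, and the upload is simulated by moving results into a list. It is not a specific ST API.

```python
from collections import deque
from typing import Callable, Deque, List


class OfflineTolerantNode:
    """Hypothetical edge node: acts on every reading locally and buffers
    results for upload, so a lost network link never blocks a decision."""

    def __init__(self, classify: Callable[[float], str], buffer_size: int = 100) -> None:
        self.classify = classify
        # If the link stays down too long, the oldest results are dropped first.
        self.pending: Deque[str] = deque(maxlen=buffer_size)
        self.uploaded: List[str] = []

    def on_reading(self, value: float, link_up: bool) -> str:
        decision = self.classify(value)  # local inference: no network needed
        self.pending.append(decision)
        if link_up:
            self.flush()
        return decision

    def flush(self) -> None:
        # Stand-in for a real upload: results are simply moved to a list.
        while self.pending:
            self.uploaded.append(self.pending.popleft())


# Usage: the link is down for the first two readings, then comes back.
node = OfflineTolerantNode(lambda v: "alert" if v > 0.8 else "ok")
readings = [(0.2, False), (0.9, False), (0.1, True)]  # (value, link_up)
decisions = [node.on_reading(v, up) for v, up in readings]
```

Every reading gets an immediate local decision; buffered results reach the cloud only once connectivity returns.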

5. Enhanced data privacy

By keeping sensitive data on-device rather than transmitting it over networks, edge AI significantly reduces the risk of data breaches and makes it easier to comply with privacy regulations.

How edge AI works

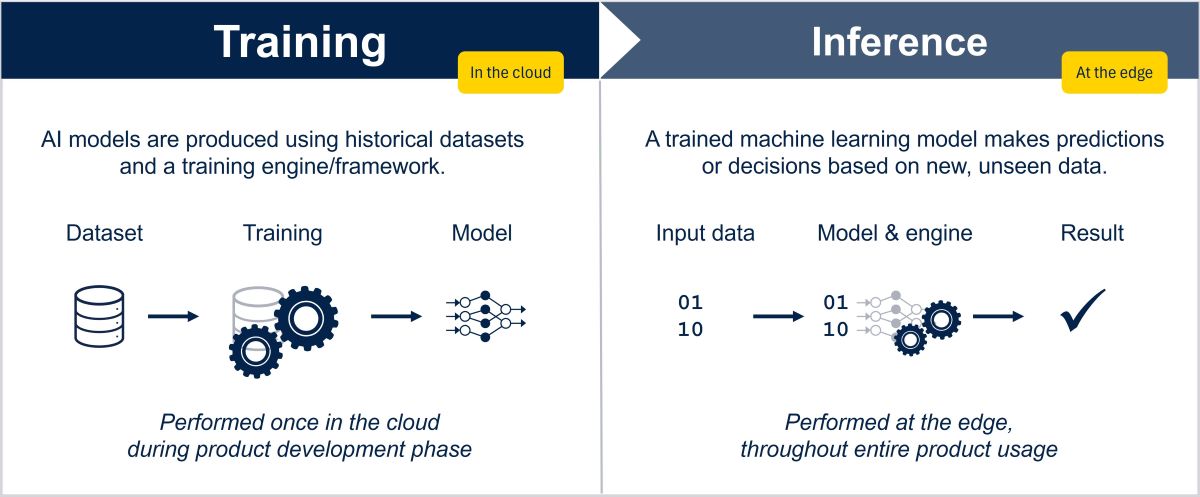

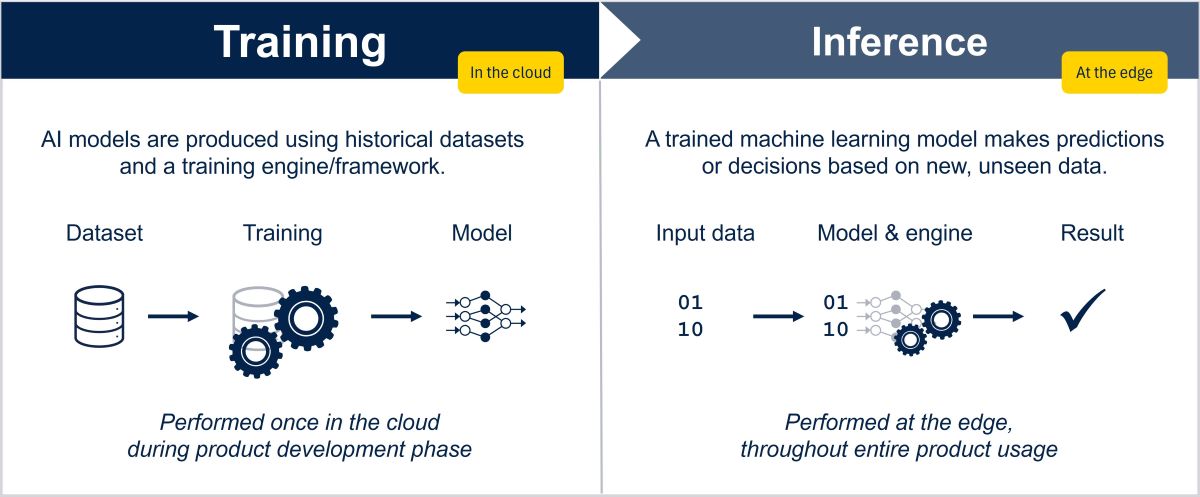

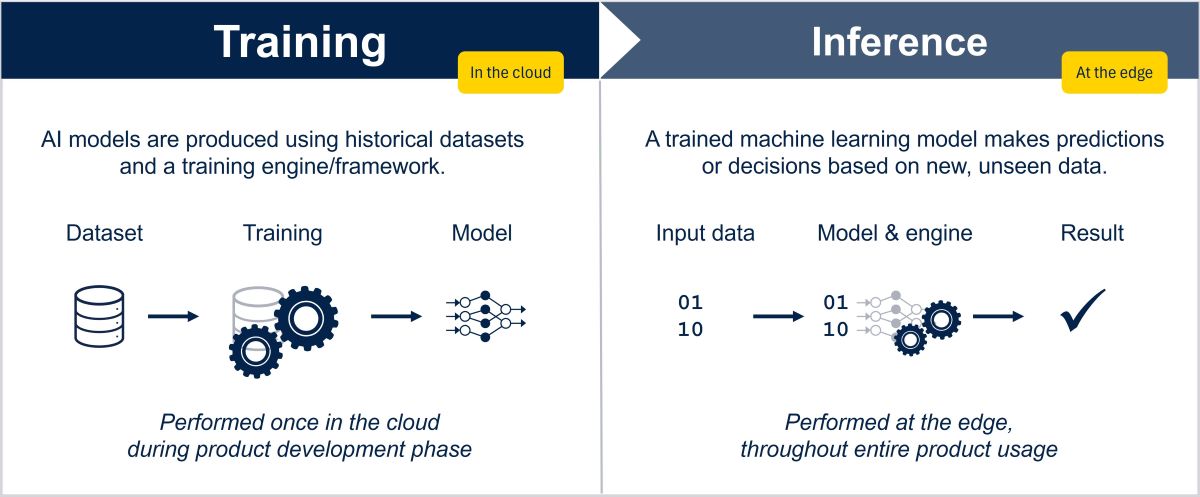

Implementing AI algorithms typically involves two key phases: training and inference. During training, historical data is used to build and optimize a model. In the inference phase, this trained model is deployed to generate predictions or make decisions based on new, incoming data.

Both phases involve a series of steps, from data acquisition and model development to hardware integration and deployment, followed by ongoing maintenance to keep the solution accurate and effective over time.
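The split between training and inference can be illustrated with a deliberately simple model. The sketch below uses a nearest-centroid classifier as a stand-in for a real AI model: `train` builds the model from labeled historical data, and `infer` applies it to new readings. The feature values and class names are hypothetical. In a real edge deployment, training would typically run on a workstation or in the cloud, and only the inference step would run on the device.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def train(samples: List[Vector], labels: List[str]) -> Dict[str, List[float]]:
    """Training phase: compute one centroid (mean vector) per class from historical data."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        for i, v in enumerate(x):
            sums[y][i] += v
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def infer(model: Dict[str, List[float]], x: Vector) -> str:
    """Inference phase: assign new data to the class with the nearest centroid."""
    return min(model, key=lambda y: math.dist(model[y], x))


# Usage with hypothetical two-feature sensor data.
historical = [[0.1, 0.2], [0.2, 0.1], [2.0, 2.1], [2.2, 1.9]]
labels = ["normal", "normal", "fault", "fault"]
model = train(historical, labels)
```

Here `infer(model, [0.15, 0.18])` returns `"normal"` and `infer(model, [2.1, 2.0])` returns `"fault"`. The trained model is just a small table of numbers, which is why the inference step can fit on constrained hardware.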

Deploying edge AI in real-world applications is a collaborative effort

Data scientists design and optimize AI models using real-world data to maximize accuracy and efficiency for edge deployment. Embedded systems engineers then integrate these models into resource-constrained devices, adapting firmware, optimizing real-time performance, and ensuring reliable operation with minimal power consumption. Together, they iterate through testing and refinement, bridging advanced AI innovation with intelligent, efficient edge solutions.

Four enablers to help developers overcome the challenges of edge AI deployment

ST has been investing in edge AI research, innovation, and development activities for over a decade. We provide a holistic approach to address the main challenges developers face in edge AI by:

- simplifying the deployment of AI on resource-constrained devices

- providing tools and models that accelerate development and reduce time-to-market

- supporting a wide range of applications, from anomaly detection to advanced computer vision, audio processing, and speech recognition

Our goal is to help all developers overcome the complexity of deploying edge AI by focusing on four main enablers.

1. Scalable hardware platforms

ST offers a wide range of hardware that can run edge AI workloads, from smart sensors to general-purpose STM32 microcontrollers with or without AI acceleration, as well as SPC5 and Stellar automotive microcontrollers.

This scalable hardware portfolio covers a wide range of applications, from time-series data analysis (anomaly detection, activity recognition) to advanced use cases such as computer vision or speech recognition, all running locally at the edge.
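As an example of the kind of time-series workload mentioned above, the sketch below flags anomalies with a rolling z-score: a reading is anomalous when it lies more than a chosen number of standard deviations from the mean of recent readings. This is a classic statistical baseline swapped in for illustration. It is not a trained neural network and not the algorithm used by any ST tool, and the window size, threshold, and readings are assumptions.

```python
import statistics
from collections import deque
from typing import Deque


class RollingZScoreDetector:
    """Flags a sample as anomalous when it lies more than `threshold`
    standard deviations from the mean of the last `window` samples."""

    def __init__(self, window: int = 50, threshold: float = 3.0) -> None:
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= 2:  # need at least two samples for a spread
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        self.history.append(value)  # the newest sample joins the baseline
        return is_anomaly


# Usage: steady hypothetical vibration readings followed by a spike.
detector = RollingZScoreDetector(window=10, threshold=3.0)
readings = [1.0, 1.2, 0.9, 1.1, 1.0, 0.95, 1.05, 5.0]
flags = [detector.update(r) for r in readings]
```

Only the final spike is flagged. The detector keeps a fixed-size window, so its memory use stays constant regardless of how long it runs, a property that matters on microcontrollers.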

2. User-friendly software tools enabling hundreds of thousands of projects

ST’s software ecosystem is designed to reduce complexity, accelerate development, and ensure that both embedded engineers and data scientists can efficiently embed edge AI into their solutions. ST edge AI tools currently support more than 160,000 projects yearly. Embedded engineers benefit from simplified AutoML tools for streamlined model optimization, benchmarking, and deployment. Data scientists can leverage advanced model optimization and conversion tools to fine-tune and adapt their models for a variety of platforms.

3. A vibrant developer community

We support a global network where developers can share knowledge, access resources, and collaborate on innovative edge AI projects. This edge AI community accelerates learning and helps drive new ideas into real-world solutions. Find out more on the ST Community.

4. A global network of partners

ST and Authorized Partners deliver ready-to-use solutions and speed up customer application development. These partnerships ensure that customers have access to proven technologies and the latest advancements in hardware and software, tailored to their specific application needs, along with expert, flexible support throughout their edge AI journey.

Frequently asked questions

What devices can run edge AI?

Edge AI can run on a wide range of devices, from smart sensors and ultralow-power microcontrollers to more powerful edge gateways, smartphones, and industrial PCs. The right choice depends on the application’s complexity, power budget, and performance requirements.

What are the benefits of edge AI?

Edge AI processes data directly on the device, offering reduced latency for real-time processing, lower bandwidth and cloud costs, sustainable energy usage, improved reliability and resilience, and enhanced data privacy and security by keeping sensitive data on-device.

What is the difference between edge AI and tiny edge AI?

Tiny edge AI is a subset of edge AI running on highly resource-constrained devices, enabling machine learning inference on tiny embedded systems. Edge AI is a broader concept encompassing AI execution on a wide range of edge devices with varying capabilities, from tiny microcontrollers to application processors.

Which ST hardware platforms support edge AI?

ST offers a range of hardware platforms supporting edge AI, including all the STM32 MCUs and MPUs, Stellar and SPC5 automotive microcontrollers, and smart MEMS sensors with a machine learning core or an intelligent sensing processing unit. These enable a variety of edge AI workloads, from time-series analysis to computer vision.

How does edge AI improve data privacy and security?

Since data is processed locally on the device, sensitive information does not need to be transmitted over the network or stored externally. This reduces the risk of interception or unauthorized access. By minimizing reliance on cloud connectivity, edge AI systems are less vulnerable to network-based attacks. Additionally, processing data locally enables faster detection of and response to security threats, enhancing overall system resilience and privacy.

Can edge AI and cloud AI work together?

Yes. Cloud AI is typically used for complex tasks and for analyzing large volumes of data over long periods, providing aggregated insights. Edge AI, in contrast, handles real-time inference and short-term data analysis locally on devices, enabling faster response times. A hybrid approach combining cloud and edge AI is common, optimizing performance, cost, and security by leveraging the strengths of each.
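A hybrid split can be sketched as follows, assuming the edge device classifies each sample in real time and sends only a compact per-window summary, while the cloud combines many summaries into long-term statistics. The `Summary` type, the threshold classifier, and the sample values are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class Summary:
    """Compact payload the edge device sends instead of raw data."""
    window_id: int
    sample_count: int
    label_counts: Dict[str, int]


def edge_window(window_id: int, samples: Iterable[float],
                classify: Callable[[float], str]) -> Summary:
    """Edge side: classify each sample locally (real-time path), then summarize."""
    counts = Counter(classify(s) for s in samples)
    return Summary(window_id, sum(counts.values()), dict(counts))


def cloud_aggregate(summaries: Iterable[Summary]) -> Dict[str, float]:
    """Cloud side: combine many summaries into long-term label frequencies."""
    totals: Counter = Counter()
    n = 0
    for s in summaries:
        totals.update(s.label_counts)
        n += s.sample_count
    return {label: count / n for label, count in totals.items()} if n else {}


# Usage: two windows of hypothetical readings, classified on the edge.
classify = lambda v: "alert" if v > 0.8 else "ok"
windows = [[0.1, 0.9, 0.2], [0.3, 0.4, 0.95, 0.85]]
summaries = [edge_window(i, w, classify) for i, w in enumerate(windows)]
result = cloud_aggregate(summaries)
```

The edge keeps the latency-critical decision local, while the cloud receives a few counts per window instead of every raw reading.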

What is the first step in implementing edge AI?

Before exploring hardware or software possibilities, the very first step is to clearly define the specific use case for edge AI. This involves identifying one or more data sources, the AI functionality that is required, as well as the constraints and the performance goals. This foundational understanding guides all subsequent decisions, from selecting the right hardware platform to choosing or designing AI models optimized for the edge.

The ST Edge AI Suite offers a useful hub for developers to find case studies, tools, and resources to define their use case and find the right tools to get started.
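Writing the use-case definition down in a structured form makes it easier to check candidate models against it. The sketch below is a hypothetical checklist, not an ST tool format: the field names, budgets, and the `small_cnn` model figures are assumptions, and real memory and latency numbers would come from benchmarking on the target hardware.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EdgeAIUseCase:
    """Hypothetical use-case definition: data sources, AI function, constraints, goals."""
    data_sources: List[str]   # sensors feeding the model
    ai_function: str          # required AI functionality
    max_latency_ms: float     # performance goal
    flash_budget_kb: int      # constraint: non-volatile memory for the model
    ram_budget_kb: int        # constraint: working memory for inference


@dataclass
class CandidateModel:
    """Hypothetical benchmark figures for one candidate model."""
    name: str
    flash_kb: int
    ram_kb: int
    latency_ms: float


def check_fit(use_case: EdgeAIUseCase, model: CandidateModel) -> List[str]:
    """Return the violated constraints; an empty list means the model fits."""
    problems = []
    if model.flash_kb > use_case.flash_budget_kb:
        problems.append(f"flash: {model.flash_kb} kB > {use_case.flash_budget_kb} kB budget")
    if model.ram_kb > use_case.ram_budget_kb:
        problems.append(f"RAM: {model.ram_kb} kB > {use_case.ram_budget_kb} kB budget")
    if model.latency_ms > use_case.max_latency_ms:
        problems.append(f"latency: {model.latency_ms} ms > {use_case.max_latency_ms} ms goal")
    return problems


# Usage with hypothetical numbers.
use_case = EdgeAIUseCase(["3-axis accelerometer"], "anomaly detection",
                         max_latency_ms=10.0, flash_budget_kb=256, ram_budget_kb=64)
model = CandidateModel(name="small_cnn", flash_kb=180, ram_kb=80, latency_ms=6.5)
problems = check_fit(use_case, model)
```

In this example the model meets its flash and latency targets but exceeds the RAM budget, which would point toward a smaller model or a device with more memory.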