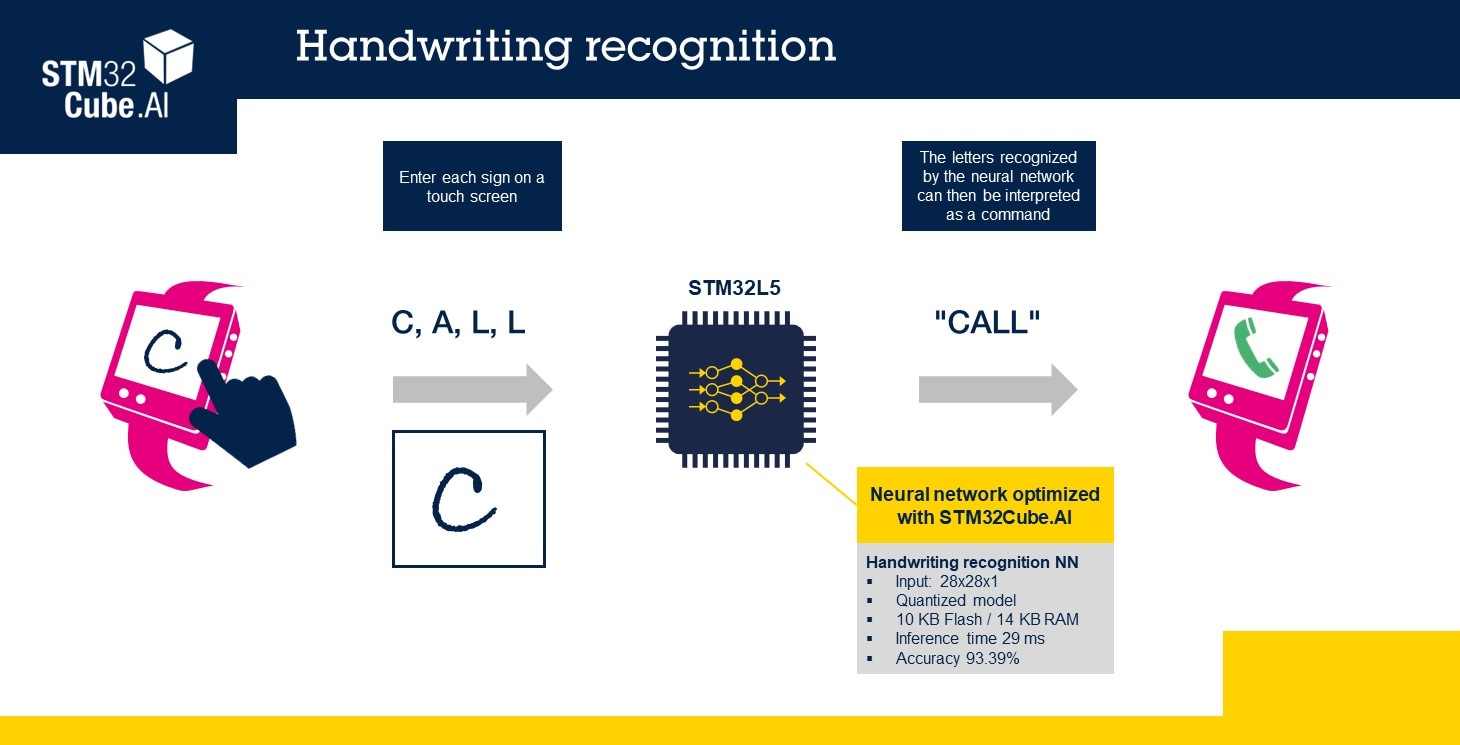

Approach

- The touch screen is captured as an image to be classified by the neural network (NN)

- Each character or letter is recognized as a composition of specific commands

- The demo runs on the STM32L562E discovery kit, with the NN inference time reported per character (see Results)

The model can be re-trained thanks to the STM32 Model Zoo.
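The capture step described above can be sketched as follows. This is a minimal illustration, not the demo's firmware: the function name `rasterize_stroke`, the 240 x 240 panel size, and the 0/255 pixel encoding are assumptions made for the example. It maps touch coordinates onto a 28 x 28 grid, which matches the model input size listed under Results.

```python
def rasterize_stroke(points, src_width, src_height, size=28):
    """Map touch points (x, y) in panel coordinates onto a size x size grid.

    Returns a list of rows; each pixel is 0 (background) or 255 (ink).
    The pixel encoding is an assumption for this sketch.
    """
    image = [[0] * size for _ in range(size)]
    for x, y in points:
        # Scale panel coordinates to grid coordinates, then clamp to the grid.
        col = min(size - 1, max(0, x * size // src_width))
        row = min(size - 1, max(0, y * size // src_height))
        image[row][col] = 255
    return image


# Hypothetical 240 x 240 touch panel with three samples along a diagonal.
stroke = [(0, 0), (120, 120), (239, 239)]
image = rasterize_stroke(stroke, src_width=240, src_height=240)
```

A real capture would also connect consecutive samples and thicken the line so the result resembles the training images; that is left out here to keep the sketch short.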

Sensor

The model zoo allows users to test the camera module bundle (reference: B-CAMS-OMV).

Data

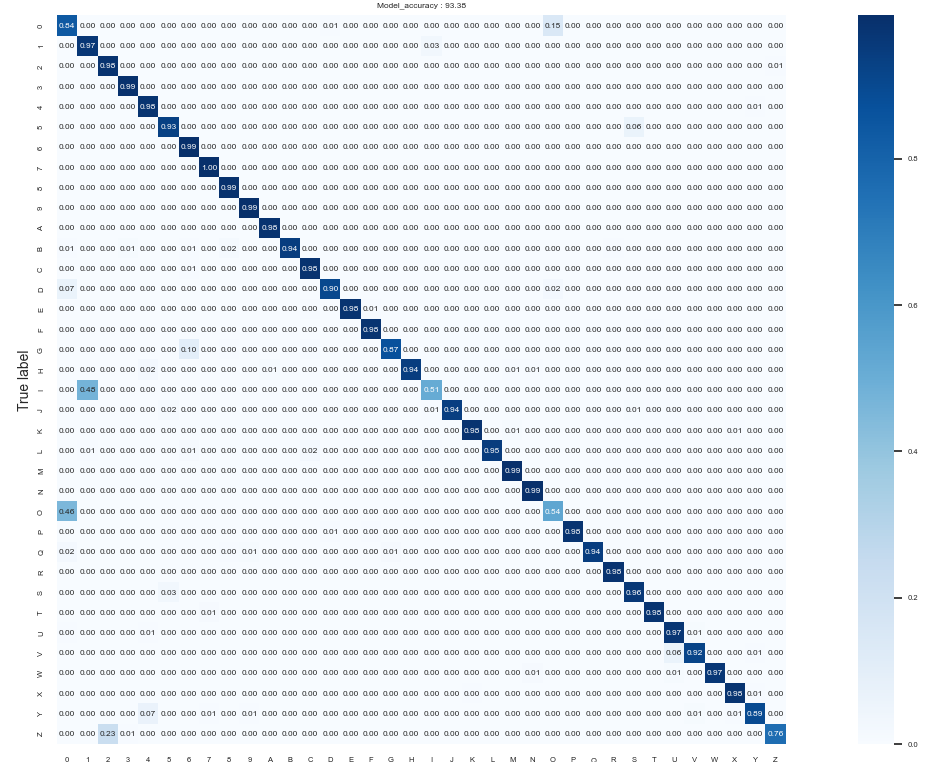

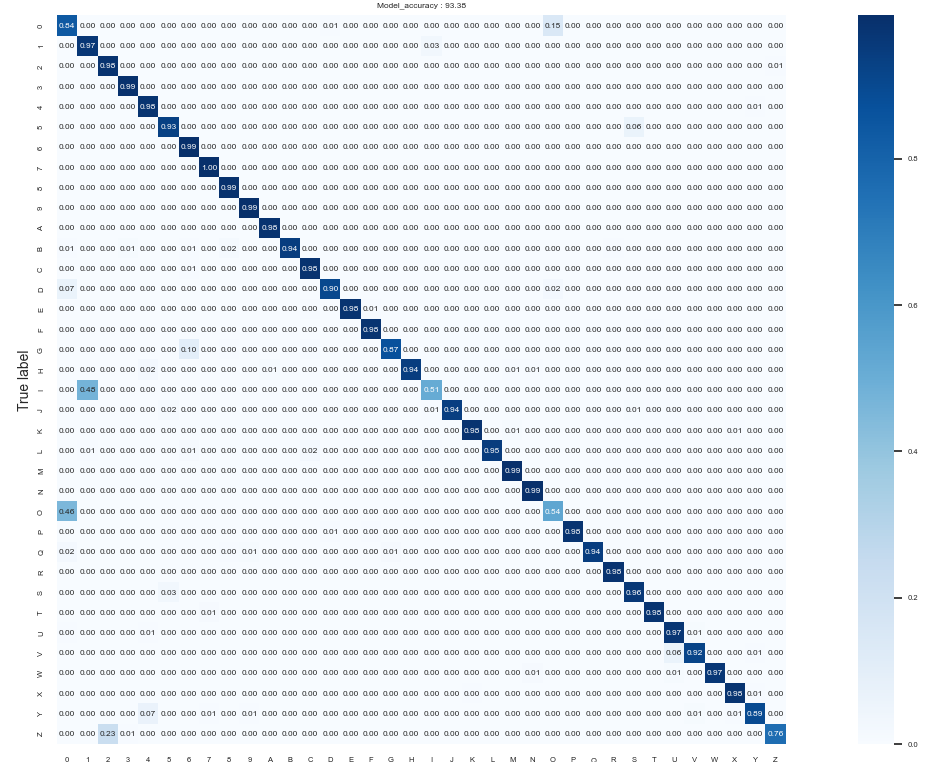

In the STM32 model zoo, the model is trained on a subset of the EMNIST dataset.

In this experiment, only the ten digit classes [0-9] and the uppercase letters [A-Z] were kept from the MATLAB version of the EMNIST ByClass dataset.

For the demo, the dataset was enriched with images captured from the touch screen on the ST board.
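The class selection described above could look like the sketch below. It assumes the usual EMNIST ByClass label order (0 to 9 for digits, 10 to 35 for uppercase letters, 36 to 61 for lowercase letters); the helper name `keep_digits_and_uppercase` and the toy data are hypothetical, and loading the MATLAB file is deliberately left out.

```python
NUM_KEPT_CLASSES = 36  # digits 0-9 plus uppercase A-Z


def keep_digits_and_uppercase(images, labels):
    """Keep only the samples whose label index is below 36.

    Assumes the EMNIST ByClass label order:
    0-9 digits, 10-35 uppercase letters, 36-61 lowercase letters.
    """
    kept = [
        (image, label)
        for image, label in zip(images, labels)
        if 0 <= label < NUM_KEPT_CLASSES
    ]
    kept_images = [image for image, _ in kept]
    kept_labels = [label for _, label in kept]
    return kept_images, kept_labels


# Toy stand-in for the real dataset: strings instead of pixel arrays.
toy_images = ["img_a", "img_b", "img_c", "img_d"]
toy_labels = [3, 10, 36, 61]
images_36, labels_36 = keep_digits_and_uppercase(toy_images, toy_labels)
```

Images captured from the board's touch screen would then be appended to the filtered set with labels in the same 0 to 35 range.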

Data format

The dataset is made of:

- uppercase letters from A to Z

- digits from 0 to 9

The dataset contains 28 x 28 pixel grayscale images organized in 36 balanced classes.
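To illustrate how the 36 classes relate to characters, the sketch below decodes a vector of class scores into a character. The class ordering (digits first, then uppercase letters) and the names `CLASS_NAMES`, `index_to_char`, and `decode` are assumptions for this example; the ordering actually used by the trained model should be checked against its training configuration.

```python
import string

# Assumed class order: "0".."9" followed by "A".."Z" (36 classes in total).
CLASS_NAMES = string.digits + string.ascii_uppercase


def index_to_char(index):
    """Return the character for a class index in the range 0..35."""
    if not 0 <= index < len(CLASS_NAMES):
        raise ValueError(f"class index out of range: {index}")
    return CLASS_NAMES[index]


def decode(scores):
    """Return the character whose class has the highest score."""
    if len(scores) != len(CLASS_NAMES):
        raise ValueError("expected one score per class")
    best = max(range(len(scores)), key=scores.__getitem__)
    return index_to_char(best)


# Hypothetical model output where class 10 has the highest score.
scores = [0.0] * 36
scores[10] = 0.9
predicted = decode(scores)
```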

Results

Model ST MNIST

Input size: 28x28x1

Memory footprint:

Float model:

38 Kbytes of flash memory for weights

30 Kbytes of RAM for activations

Quantized model:

10 Kbytes of flash memory for weights

14 Kbytes of RAM for activations

Accuracy:

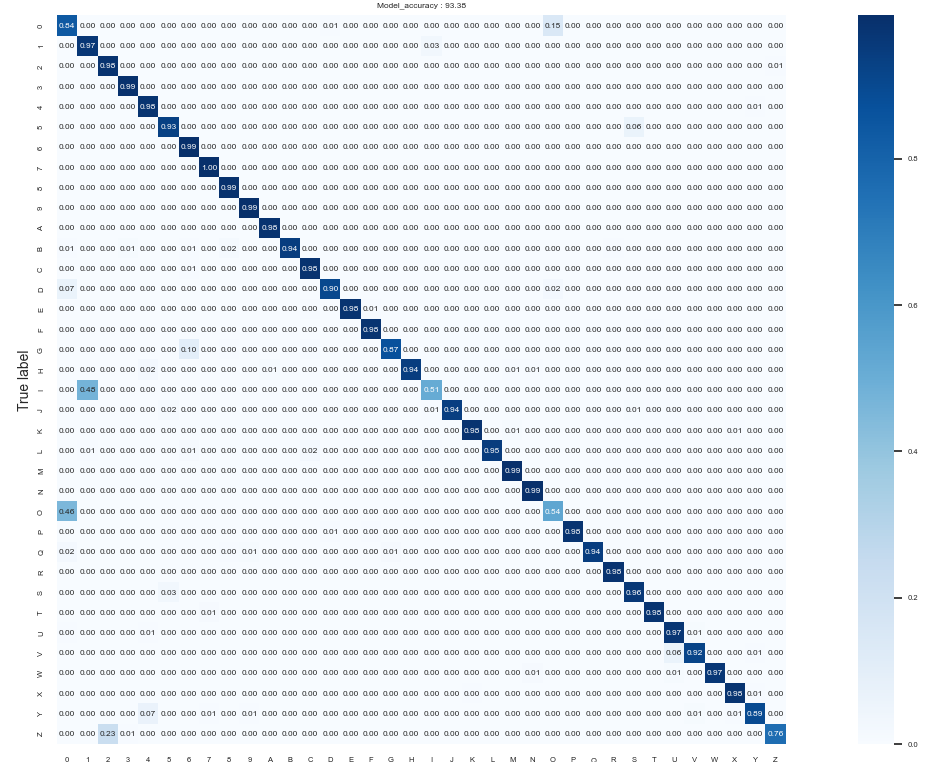

Float model: 93.48%

Quantized model: 93.39%

Performance on STM32L562E @ 110 MHz

Float model:

Inference time: 83 ms

Frame rate: 12 fps

Quantized model:

Inference time: 29 ms

Frame rate: 34 fps
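The frame rates above are consistent with taking the reciprocal of the inference time, under the assumption that inference dominates the time spent per frame. The short sketch below reproduces that arithmetic from the listed values; the helper name is illustrative.

```python
def frames_per_second(inference_ms):
    """Frame rate if each frame costs exactly one inference."""
    return 1000.0 / inference_ms


float_fps = frames_per_second(83)   # about 12.0, listed as 12 fps
quant_fps = frames_per_second(29)   # about 34.5, listed as 34 fps
speedup = 83 / 29                   # quantized model is about 2.9x faster

print(f"float: {float_fps:.1f} fps, quantized: {quant_fps:.1f} fps, "
      f"speedup: {speedup:.2f}x")
```

The listed figures match these values truncated to whole frames per second.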

Confusion matrix

Resources

Model repository: STM32 model zoo

A collection of reference AI models optimized to run on ST devices with associated deployment scripts. The model zoo is a valuable resource to add edge AI capabilities to embedded applications.

A free STM32Cube expansion package, X-CUBE-AI allows developers to convert pretrained AI algorithms automatically, such as neural network and machine learning models, into optimized C code for STM32.

Compatible with STM32L4, L5, U5, H7 series

The STM32 family of 32-bit microcontrollers based on the Arm Cortex®-M processor is designed to offer new degrees of freedom to MCU users. It offers products combining very high performance, real-time capabilities, digital signal processing, low-power / low-voltage operation, and connectivity, while maintaining full integration and ease of development.