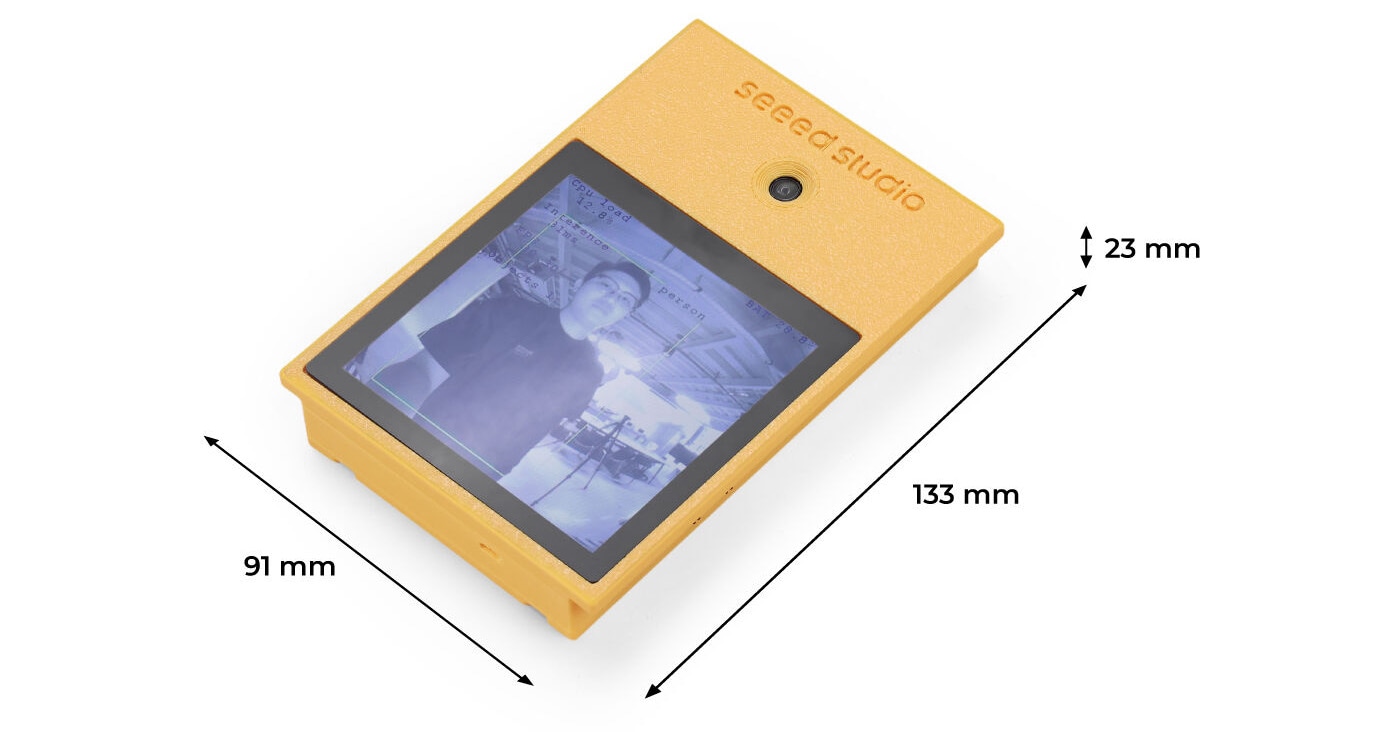

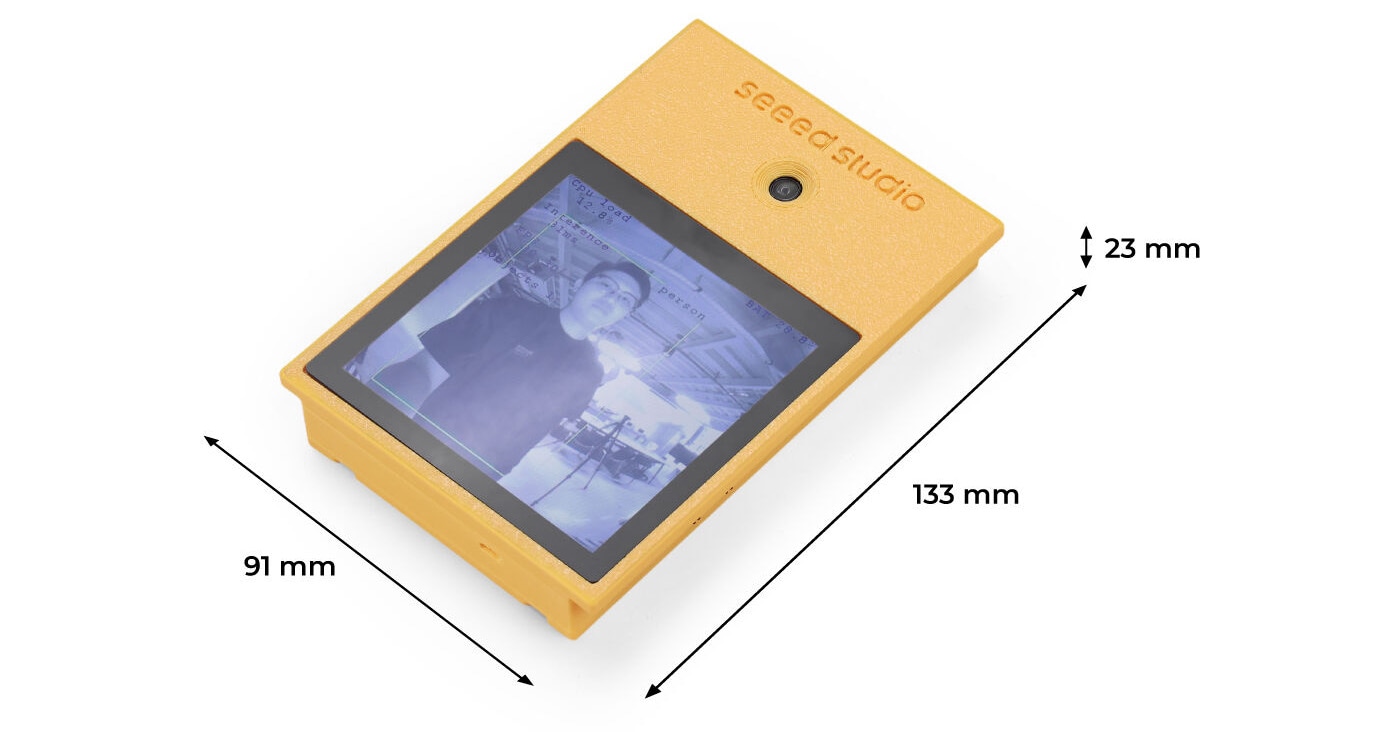

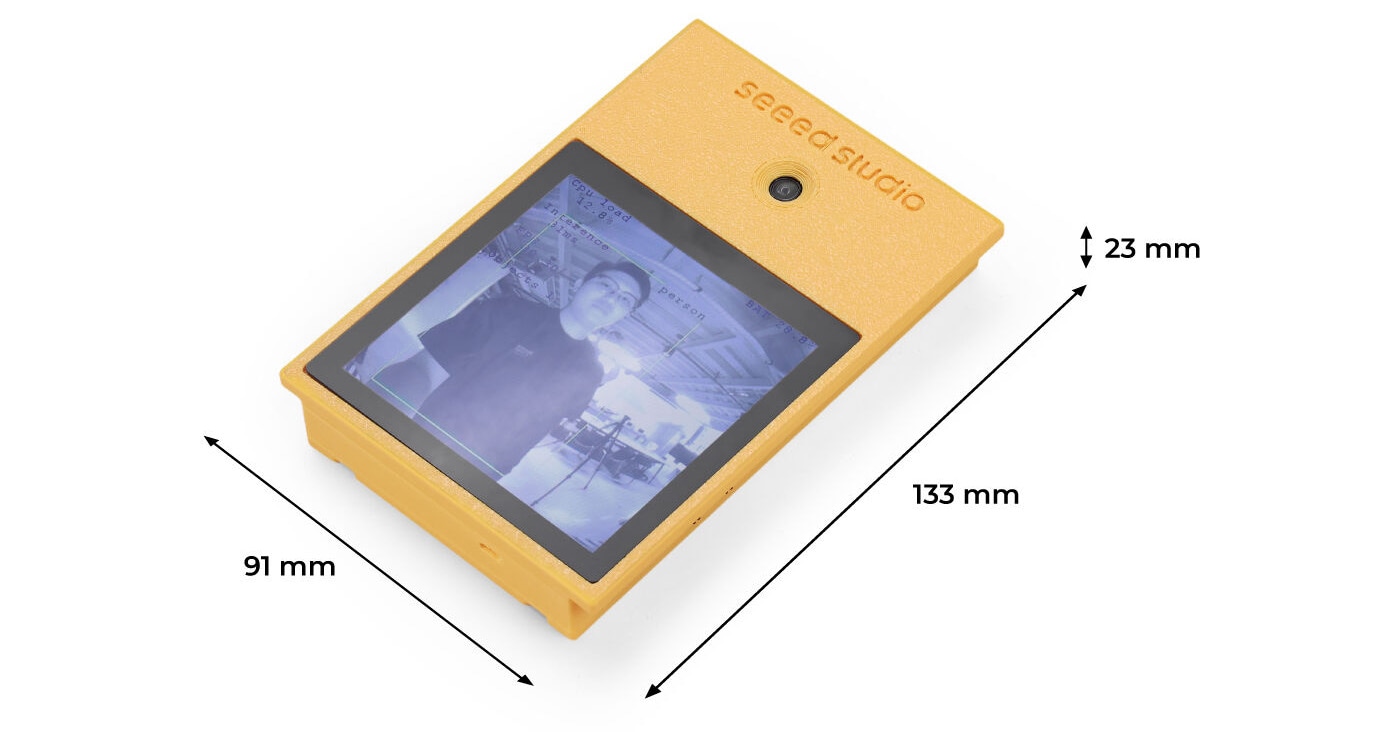

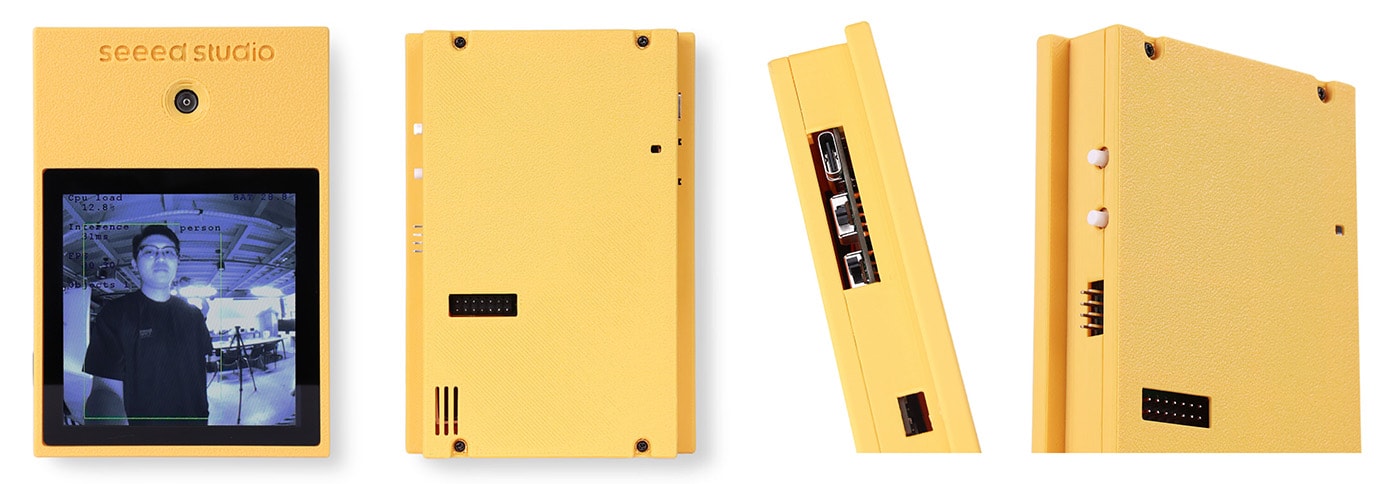

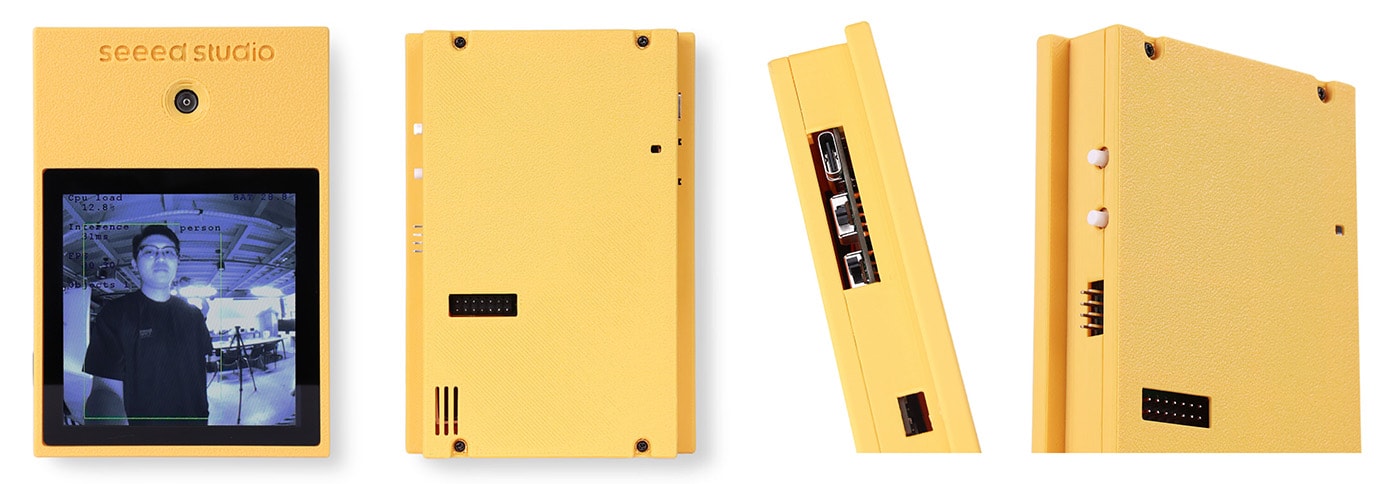

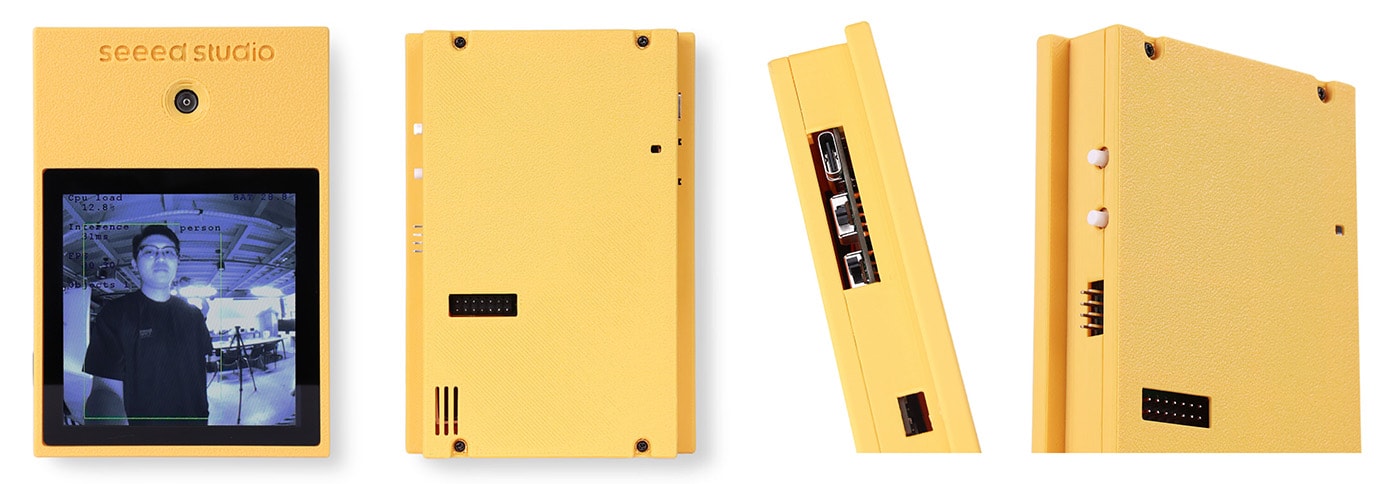

Handheld development platform for real-time vision, motion, and voice at the edge

AI assistants are moving beyond phones and cloud backends into handheld smart devices and everyday objects (appliances, kiosks, wearables, and machines), where instant responses, privacy, and low power matter most. To keep pace, teams need a fast way to prototype and iterate on new assistant behaviors (try a detector today, add gesture and voice tomorrow) without designing custom hardware or wiring up complex Linux stacks.

Approach

The ambition is to offer an open, ready‑to‑program development platform that brings vision, audio, and motion sensing together on a single STM32N6 board so teams can compose truly multimodal AI assistants without external add‑ons or cloud dependence. By unifying a global‑shutter camera, an IMU with on‑sensor processing, a microphone/audio path, touchscreen UI, and Wi‑Fi, the kit lets developers experiment and iterate rapidly, from a visual detector to gesture control to voice interaction, using the same hardware and firmware base.

These capabilities unlock a wide range of real‑world deployments where instant, private, and energy‑efficient interactions are essential.

Application overview

A compact, on‑device pipeline turns raw sensor signals into real‑time assistant actions on the STM32N6 platform, keeping data local and latency predictable for a smooth user experience.

1 - Capture

The camera, IMU, and microphone collect synchronized vision, motion, and audio streams for multimodal processing at the edge.

2 - Preprocess

The internal image signal processor (DCMIPP) resizes and crops frames to the network input size, the IMU's ISPU extracts motion features, and the audio path splits samples into frames for inference.
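As a rough illustration of this step, the host-side Python sketch below computes a centered crop that matches the network's aspect ratio and splits an audio buffer into overlapping frames. The 800x700 source size is the camera resolution listed for this kit; the square network input, frame length, and hop size are assumptions chosen for illustration. On the board this work is handled by the DCMIPP and the audio path, not by code like this.

```python
def center_crop(src_w, src_h, dst_w, dst_h):
    """Return (x, y, w, h) of the largest centered crop with the target aspect ratio."""
    # Compare aspect ratios by cross-multiplication to stay in integer arithmetic.
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: keep full height, trim the width.
        h = src_h
        w = src_h * dst_w // dst_h
    else:
        # Source is taller than (or equal to) the target: keep full width, trim the height.
        w = src_w
        h = src_w * dst_h // dst_w
    return ((src_w - w) // 2, (src_h - h) // 2, w, h)


def frame_audio(samples, frame_len, hop):
    """Split samples into overlapping frames; a trailing partial frame is dropped."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


if __name__ == "__main__":
    # Hypothetical 256x256 network input fed from the 800x700 sensor.
    print(center_crop(800, 700, 256, 256))
    # Hypothetical framing: 4-sample frames with a hop of 2 over 10 samples.
    print(len(frame_audio(list(range(10)), 4, 2)))
```

The crop rectangle would then be scaled down to the network input size; cropping before scaling avoids distorting object proportions.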

3 - Inference

Quantized models compiled with STM32Cube.AI run on the Neural‑ART NPU; the Cortex‑M55 performs lightweight post‑processing such as YOLO decoding.
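To make the post-processing step more concrete, here is a minimal Python sketch of what decoding a quantized detector output can look like: dequantize, filter by score, then apply greedy non-maximum suppression per class. The row layout `(cx, cy, w, h, score, class_id)`, the single shared scale and zero point, and the thresholds are all assumptions for illustration; real YOLO heads differ in layout and quantization parameters, and on the device this logic would run as C code on the Cortex-M55.

```python
def dequantize(q, scale, zero_point):
    """Affine dequantization: real_value = scale * (q - zero_point)."""
    return scale * (q - zero_point)


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def decode(rows, scale, zero_point, score_thr=0.5, iou_thr=0.45):
    """Return kept detections as (score, class_id, box), highest score first.

    rows: quantized (cx, cy, w, h, score, class_id) tuples (hypothetical layout).
    """
    candidates = []
    for cx_q, cy_q, w_q, h_q, score_q, class_id in rows:
        score = dequantize(score_q, scale, zero_point)
        if score < score_thr:
            continue  # discard low-confidence rows early
        cx, cy, w, h = (dequantize(v, scale, zero_point)
                        for v in (cx_q, cy_q, w_q, h_q))
        # Convert center/size to corner coordinates.
        box = (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)
        candidates.append((score, class_id, box))

    # Greedy NMS: visit boxes by descending score and drop any box that
    # overlaps an already kept box of the same class too strongly.
    candidates.sort(key=lambda c: c[0], reverse=True)
    kept = []
    for cand in candidates:
        if all(cand[1] != k[1] or iou(cand[2], k[2]) <= iou_thr for k in kept):
            kept.append(cand)
    return kept
```

Filtering by score before computing boxes keeps the per-frame cost low, which matters when the CPU only has the time left over between NPU inferences.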

4 - Decision and UX

Results drive on‑device actions, UI overlays on the 480×480 touchscreen, and optional Wi‑Fi event publishing, with no cloud round‑trips required.
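One way to picture this step is as a mapping from a normalized detection box to overlay pixels on the 480×480 display, plus a small event payload for optional publishing. The sketch below is illustrative only: the normalized `(x1, y1, x2, y2)` box format and the JSON field names are hypothetical, and the transport used to publish the event is left out.

```python
import json

SCREEN_W = SCREEN_H = 480  # touchscreen resolution stated in the text


def to_screen(box, width=SCREEN_W, height=SCREEN_H):
    """Convert a normalized (x1, y1, x2, y2) box to clamped integer pixel coordinates."""
    def clamp(value, limit):
        # Keep coordinates inside the drawable area [0, limit - 1].
        return max(0, min(limit - 1, int(round(value))))

    x1, y1, x2, y2 = box
    return (clamp(x1 * width, width), clamp(y1 * height, height),
            clamp(x2 * width, width), clamp(y2 * height, height))


def make_event(label, score, box):
    """Build a compact JSON payload; the field names are hypothetical."""
    payload = {
        "label": label,
        "score": round(score, 2),
        "box": list(to_screen(box)),
    }
    return json.dumps(payload, separators=(",", ":"))


if __name__ == "__main__":
    print(make_event("person", 0.914, (0.25, 0.5, 0.75, 1.0)))
```

Clamping to the visible area avoids drawing outside the frame buffer when a detection extends past the image border.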

5 - Iterate and deploy

Update models and classes, flash signed images, and scale the same application to production hardware using the STM32 toolchain.

Sensors

- VD55G1 800×700 global‑shutter camera, providing crisp, distortion-free images of fast-moving objects.

- LSM6DSO16IS IMU with a built-in Intelligent Sensor Processing Unit (ISPU), enabling ultra-low-power gesture and activity recognition (tap interaction and movement detection) without waking the main processor.

- LIS2MDL magnetometer, providing heading context for orientation‑aware apps.

- MP34DT06JTR digital microphone and codec, enabling on‑device voice and audio‑event detection for interactive UX.
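As an example of how raw sensor data becomes assistant context, a compass heading can be estimated from the magnetometer's horizontal field components. The Python sketch below is a simplification under stated assumptions: the device is held level, calibration offsets have already been removed, and 0 degrees means the sensor's X axis points to magnetic north. The axis convention and function name are hypothetical, and a real application would add calibration and tilt compensation using the IMU.

```python
import math


def heading_degrees(mx, my):
    """Return a heading in [0, 360) from horizontal magnetic field components.

    Assumes a level device and that 0 degrees corresponds to the sensor's
    X axis pointing to magnetic north (an illustrative convention).
    """
    heading = math.degrees(math.atan2(my, mx)) % 360.0
    # Floating-point modulo can round a tiny negative angle up to 360.0.
    return 0.0 if heading >= 360.0 else heading


if __name__ == "__main__":
    for mx, my in [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]:
        print(mx, my, heading_degrees(mx, my))
```

Only the ratio of the two components matters, so the result does not depend on the sensor's output units.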

Resources

Extensive developer resources, including hardware specs, quick‑start guides, flashing steps, sample apps, and model deployment instructions, are available on the AI Assistant wiki page to accelerate prototyping and productization.

Author: Seeed and STMicroelectronics | Last update: Oct, 2025

Optimized with STM32Cube.AI

X-CUBE-AI, a free STM32Cube expansion package, lets developers automatically convert pretrained AI algorithms, such as neural network and machine learning models, into optimized C code for STM32.

Most suitable for STM32N6 Series

The STM32 family of 32-bit microcontrollers based on the Arm Cortex®-M processor is designed to offer new degrees of freedom to MCU users. It offers products combining very high performance, real-time capabilities, digital signal processing, low-power / low-voltage operation, and connectivity, while maintaining full integration and ease of development.