The edge AI landscape: understanding what’s next

The future of AI is distributed

Edge AI is paving the way for intelligence to be embedded in virtually every device, everywhere.

Across the technology landscape, there is a constant balancing act between power and performance at every layer of the system.

- At the top, cloud infrastructure draws thousands of kilowatts to run large-scale AI models and data processing.

- Closer to the edge, specialized hardware, such as neural processing units, handles moderate workloads efficiently.

- At the device level, billions of microcontrollers and sensors operate at extremely low power, from a single watt down to microwatts. They perform AI inference right where data is generated, enabling real-time insights and responsiveness at the network’s edge.
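To put the spread in perspective, here is a rough worked comparison at the low ends of the ranges above: a thousand kilowatts for a cloud installation and one microwatt for a tiny sensor. The two budgets differ by about twelve orders of magnitude:

```latex
\frac{10^{3}\,\text{kW}}{1\,\mu\text{W}}
  = \frac{10^{6}\,\text{W}}{10^{-6}\,\text{W}}
  = 10^{12}
```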

Distributing AI workloads across the cloud, the edge, and tiny edge devices maximizes both performance and efficiency. It allows AI to scale from massive data centers down to the smallest smart sensors, opening new opportunities for innovation, agility, and sustainability in AI-powered solutions.

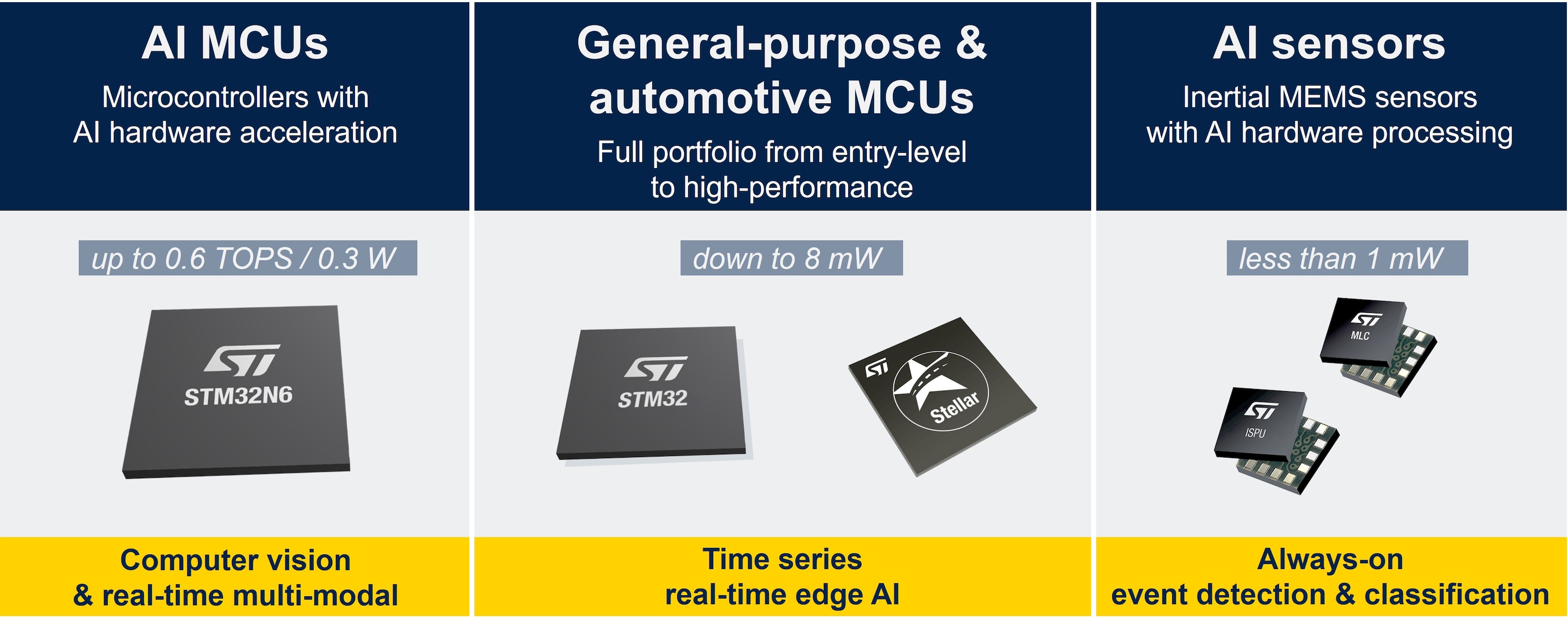

ST is leading tiny edge AI deployment

Because they process data directly at the edge of the network, microcontrollers and smart sensors are the front line of AI deployment.

They are the critical interface between the physical world and intelligent systems. They capture real-world data, analyze it through embedded AI algorithms, and initiate immediate actions or decisions.

STM32N6 microcontrollers, equipped with embedded AI acceleration in the form of the ST Neural-ART Accelerator, deliver impressive performance, reaching up to 600 giga operations per second (GOPS). With AI-accelerated microcontrollers, ST empowers embedded devices to handle computer vision and real-time multimodal processing.
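A peak figure such as 600 GOPS bounds throughput rather than guaranteeing it. The arithmetic is simple: divide the peak rate by a model's operation count to get the highest inference rate the accelerator could reach at full utilization. The sketch below shows this. The function name and the model cost are hypothetical. Real throughput is lower once you account for memory transfers, pre- and post-processing, and any layers that cannot run on the NPU.

```c
/* Hypothetical helper, not an ST API.
 * Upper bound on inferences per second for an accelerator with a given
 * peak rate in giga operations per second (GOPS). Assumes every operation
 * of the model runs on the accelerator at 100% utilization, and that the
 * model's cost is counted in the same units as the peak figure. */
double max_inferences_per_second(double peak_gops, double gop_per_inference)
{
    if (gop_per_inference <= 0.0) {
        return 0.0; /* invalid model cost: report no throughput */
    }
    return peak_gops / gop_per_inference;
}
```

Take a hypothetical model that needs 1.5 giga operations per inference. `max_inferences_per_second(600.0, 1.5)` returns 400.0, an upper bound of 400 inferences per second. The model's operation count must be measured the same way as the peak figure, for instance whether a multiply-accumulate counts as one operation or two.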

Our general-purpose and automotive microcontrollers can run AI at low power levels, making them ideal for energy-sensitive environments. These solutions cover a wide range, from the STM32 high-performance series down to ultra-low-power microcontrollers, as well as SPC5 and Stellar automotive MCUs.

We are also pushing the boundaries with intelligent sensors that consume less than one milliwatt, enabling always-on event detection and real-time edge AI on time-series data. Devices can therefore monitor and respond to events continuously while preserving battery life, thanks to in-sensor processing technologies such as the machine learning core (MLC) and the intelligent sensor processing unit (ISPU).
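The sketch below makes the sub-milliwatt figure concrete by estimating how long a battery could power the sensor alone. The battery capacity, voltage, and function name are hypothetical inputs chosen for illustration. The estimate assumes a constant draw and ignores several factors: self-discharge, temperature, the rest of the system's consumption, and the cell's voltage falling as it drains.

```c
/* Hypothetical helper, not an ST API.
 * Rough battery-life estimate for an always-on sensing node, assuming a
 * constant average power draw. Ignores self-discharge, temperature effects,
 * and the voltage drop as the cell empties. Returns -1.0 for a non-positive
 * load, for which no meaningful estimate exists. */
double battery_life_hours(double capacity_mah, double nominal_voltage_v,
                          double avg_power_mw)
{
    if (avg_power_mw <= 0.0) {
        return -1.0;
    }
    /* mAh * V = mWh; dividing mWh by mW leaves hours. */
    double energy_mwh = capacity_mah * nominal_voltage_v;
    return energy_mwh / avg_power_mw;
}
```

Consider a hypothetical 220 mAh, 3 V coin cell (660 mWh). `battery_life_hours(220.0, 3.0, 1.0)` returns 660.0 hours, about 27.5 days at a constant 1 mW. Halving the draw to 0.5 mW doubles that to 1320.0 hours.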

How edge AI is transforming applications: three use cases

Tiny edge AI enables a wide range of embedded applications, driving innovation across diverse industries. Its impact can be understood through three key use cases, ranging from what is achievable today to what will become possible in the future.

1. Improving existing applications

Making an existing product or application better using its existing hardware and software. In addition to their current tasks, microcontrollers can run applications such as anomaly detection, interpolation from sensor data, and more.

This use case is software-enabled and does not require AI hardware acceleration in the microcontroller. Developers can upgrade their products without changing the hardware design, or reduce costs by replacing multiple sensors with a more advanced data processing unit.
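Here is a minimal C sketch of anomaly detection running purely in software. It uses a classical statistical detector, a running z-score built on Welford's online mean and variance, in place of the AI models the text refers to. It is neither an ST library nor a neural network. It keeps three numbers per sensor channel and needs no accelerator. The type and function names, the threshold, and the warm-up length are hypothetical choices.

```c
#include <stdbool.h>

/* Hypothetical names, not an ST API.
 * Running statistics for one sensor channel (Welford's algorithm),
 * using O(1) memory, which suits microcontrollers with little RAM. */
typedef struct {
    unsigned long count;
    double mean;
    double m2; /* sum of squared deviations from the running mean */
} running_stats;

void stats_init(running_stats *s)
{
    s->count = 0;
    s->mean = 0.0;
    s->m2 = 0.0;
}

/* Returns true if x lies more than z_threshold sample standard deviations
 * from the mean of the samples accepted so far. The first min_samples
 * readings only build the baseline. Flagged samples are not folded into the
 * statistics, so a burst of outliers does not shift the baseline. With zero
 * variance, any deviation counts as anomalous (its z-score is unbounded). */
bool is_anomaly(running_stats *s, double x, double z_threshold,
                unsigned long min_samples)
{
    if (s->count >= min_samples && s->count > 1) {
        double variance = s->m2 / (double)(s->count - 1);
        double dev = x - s->mean;
        /* Compare squared quantities to avoid calling sqrt(). */
        if (dev * dev > z_threshold * z_threshold * variance) {
            return true;
        }
    }
    /* Welford update with the accepted sample. */
    s->count++;
    double delta = x - s->mean;
    s->mean += delta / (double)s->count;
    s->m2 += delta * (x - s->mean);
    return false;
}
```

A caller would pass each new reading through `is_anomaly()` and raise an event when it returns `true`. Typical settings would be a threshold of 3.0 standard deviations and a warm-up of a few dozen samples. Keeping flagged samples out of the statistics stops a run of faults from quietly becoming the new baseline. The cost is that the detector does not adapt if the signal legitimately shifts.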

2. Reducing costs

Enabling a product or application that is technically feasible today using a microprocessor or application processor, but delivered within the cost and power budget typical of a microcontroller.

For instance, people detection, sound analysis, and speech recognition can be embedded in existing applications and become more widespread thanks to hardware-accelerated microcontrollers or microprocessors that lower costs and reduce energy use.
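Energy per inference is one way to see why the power budget matters. It is the average power drawn while the inference runs multiplied by its duration. Dividing a battery's stored energy by that figure gives a rough count of the inferences it can supply, ignoring idle and sleep consumption. The symbols below are generic and not taken from any ST datasheet:

```latex
E_{\text{inf}} = \bar{P}\, t_{\text{inf}},
\qquad
N_{\text{inf}} \approx \frac{E_{\text{batt}}}{E_{\text{inf}}}
```

A device that draws less power saves energy only if it does not take proportionally longer to finish each inference.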

3. Exploring the unknown

Developing brand-new applications never considered before, and moving applications that run in the cloud today to the edge.

This approach could bring new features to the edge, such as pose and gesture estimation, biometric authentication, and object segmentation, allowing devices to understand and respond to human movements for enhanced user interaction.
