Approach

The Edge AI Sensing Kit was initially introduced to highlight the capabilities of embedded vision intelligence, with fruit detection and counting as its first demonstration scenario. This use case underscores its relevance for smart retail, where accurate, real-time inventory tracking is critical. While the initial focus is on grocery environments, the underlying edge AI vision technology is broadly applicable, spanning smart homes, smart cities, room occupancy monitoring, and other context-aware automation systems.

Edge AI changes the conventional cloud-dependent approach to vision processing by bringing intelligence directly to the device. Real-time processing at the edge reduces reliance on external infrastructure, cuts operational costs, and improves speed and efficiency. This decentralized approach also improves scalability, security, and accessibility, making vision-based applications viable across a wide range of industries.

The Edge AI Sensing Platform Discovery Kit leverages the STM32N6 MCU to run a real-time object detection and counting model entirely on-device. Key benefits include:

- Low-latency performance (typically under 200 ms) for immediate, real-time inventory visibility

- Full edge processing, with no dependency on cloud servers

- Enhanced accuracy, with AI models trained to detect multiple product types under varying lighting and arrangement conditions

- Ultra-low power consumption, enabling continuous monitoring without straining energy budgets

- Reduced operational costs, by eliminating the need for cloud servers, recurring data costs, or additional tagging infrastructure

Application overview

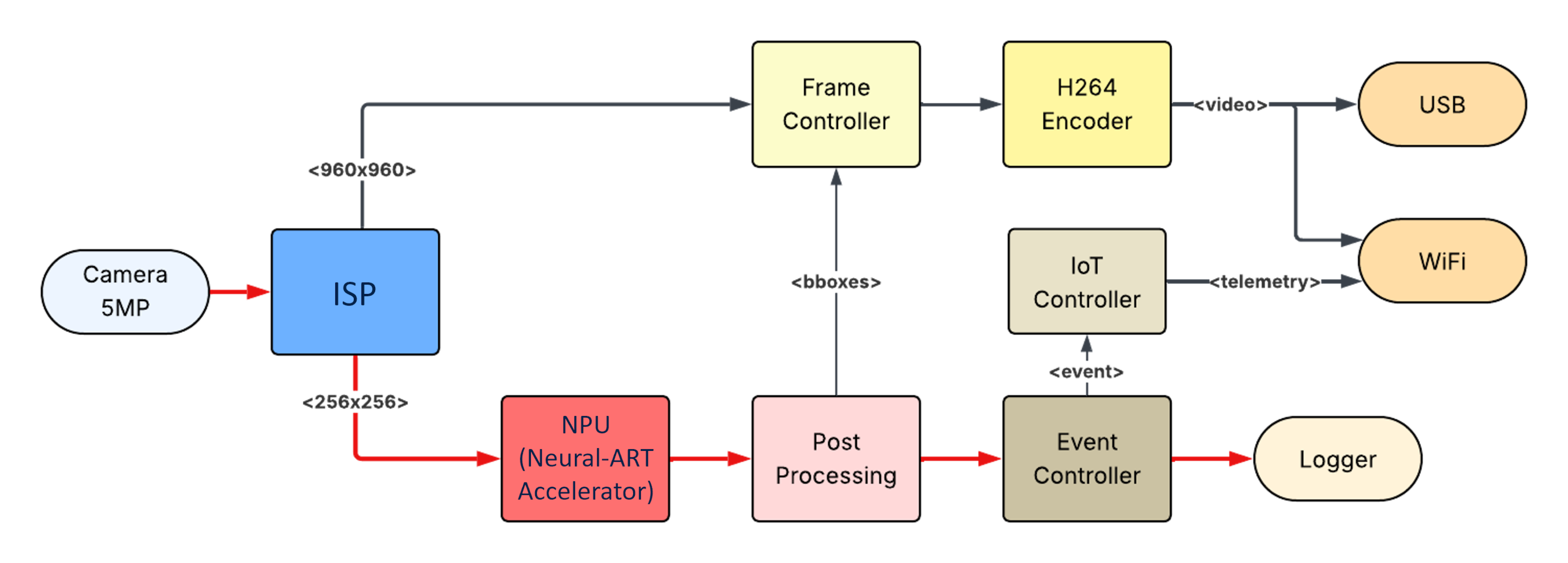

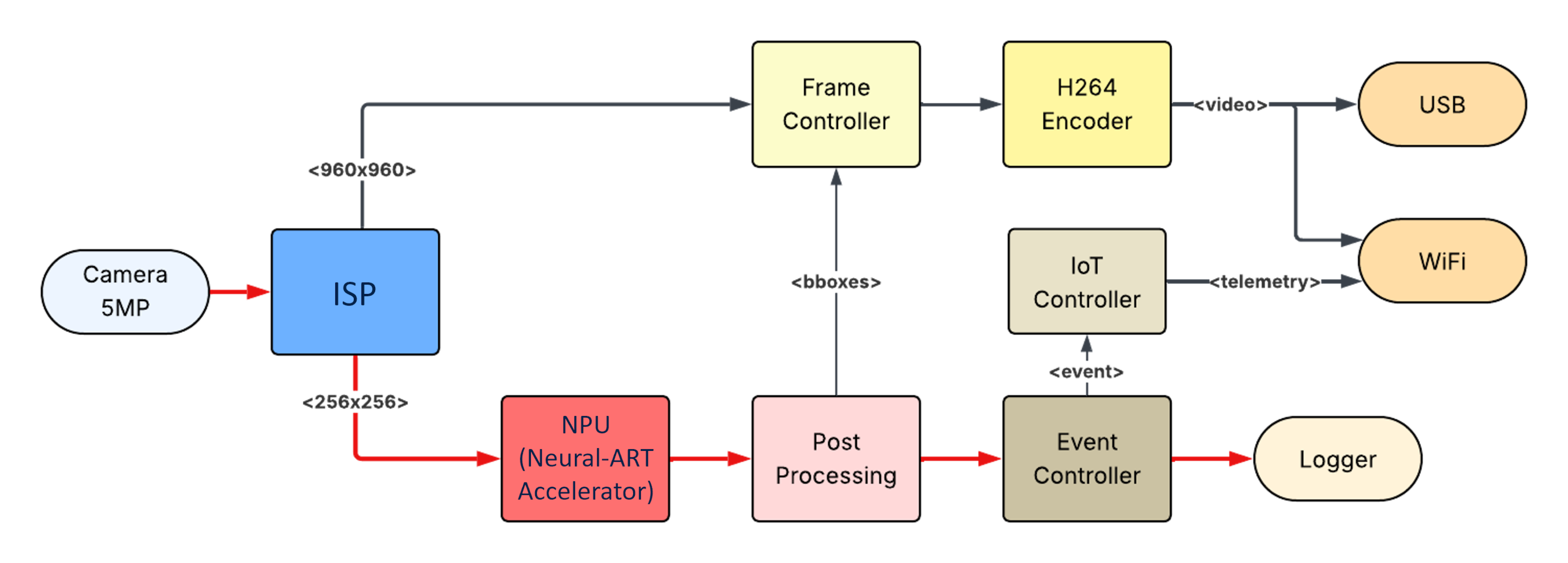

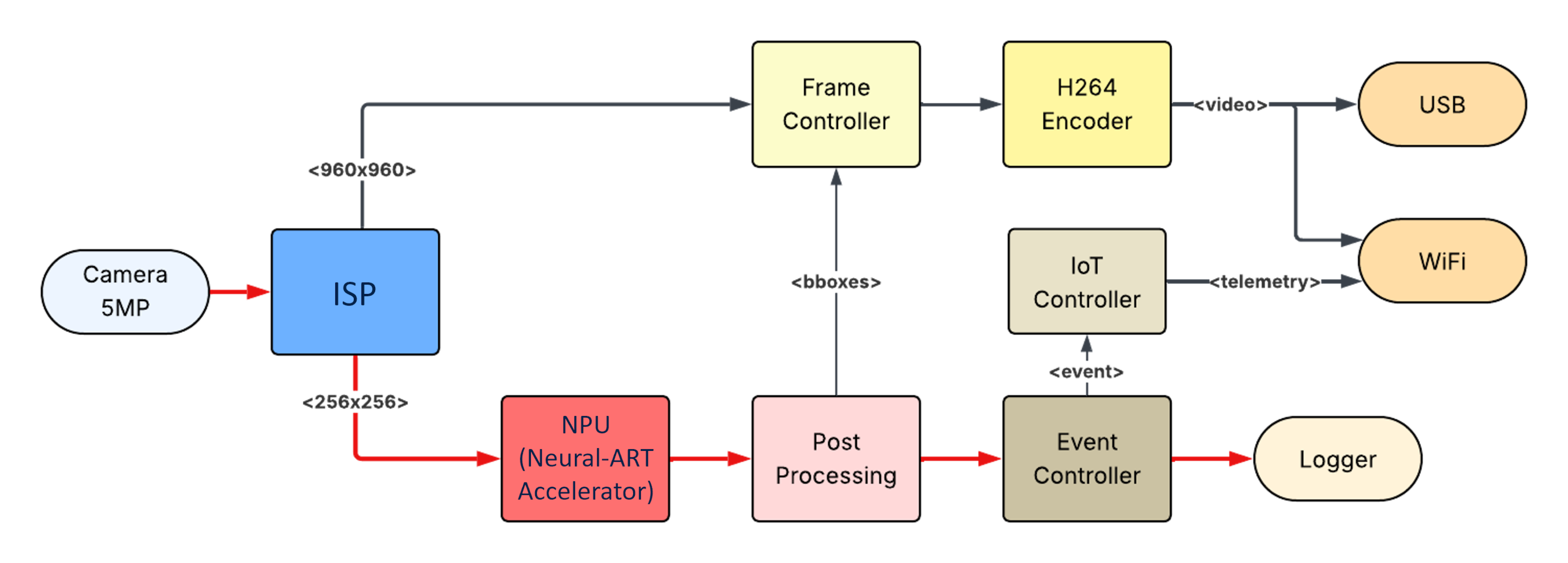

A 5MP camera captures high-resolution images, which are processed by the image signal processing (ISP) subsystem. A downscaled 256×256 frame is sent to the neural processing unit (the Neural-ART Accelerator) for fruit and hand detection. The resulting bounding boxes are post-processed to count fruits by type and to detect interactions. Events are managed by the Event Controller and logged with timestamps. In parallel, the ISP provides a 960×960 frame to the H.264 encoder, and the encoded video is streamed over USB or Wi-Fi. An IoT controller handles telemetry for remote monitoring.
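The post-processing stage described above (counting by type, detecting interactions, logging timestamped events) can be illustrated with a minimal Python sketch. It is not the kit's firmware: the `Detection` type, the `apple`/`banana`/`hand` labels, the 0.5 confidence threshold, and the rule that "interaction" means a hand box overlapping a product box are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Detection:
    label: str    # hypothetical class name, e.g. "apple" or "hand"
    score: float  # detector confidence in [0, 1]
    box: tuple    # (x_min, y_min, x_max, y_max) in the 256x256 frame


def count_by_class(detections, min_score=0.5, ignore=("hand",)):
    """Count confident detections per label, skipping non-product classes."""
    return Counter(
        d.label for d in detections
        if d.score >= min_score and d.label not in ignore
    )


def boxes_overlap(a, b):
    """True if two axis-aligned boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def hand_interactions(detections, min_score=0.5):
    """Labels of products whose box overlaps a detected hand (assumed rule)."""
    kept = [d for d in detections if d.score >= min_score]
    hands = [d for d in kept if d.label == "hand"]
    return sorted({
        d.label for d in kept
        if d.label != "hand" and any(boxes_overlap(d.box, h.box) for h in hands)
    })


def make_event(previous, current, now=None):
    """Return a timestamped event when the counts changed, otherwise None."""
    if previous == current:
        return None
    now = now or datetime.now(timezone.utc)
    labels = sorted(set(previous) | set(current))
    delta = {l: current[l] - previous[l] for l in labels if current[l] != previous[l]}
    return {"timestamp": now.isoformat(), "delta": delta}


# Example frame with made-up detections.
frame = [
    Detection("apple", 0.91, (10, 10, 60, 60)),
    Detection("apple", 0.88, (70, 10, 120, 60)),
    Detection("banana", 0.35, (130, 10, 200, 50)),  # below threshold, ignored
    Detection("hand", 0.80, (50, 40, 110, 120)),
]
counts = count_by_class(frame)
print(dict(counts), hand_interactions(frame), make_event(Counter(), counts))
```

Emitting an event only when the counts change keeps the log compact; a real system would likely also smooth the counts over several frames to avoid spurious events from a single missed detection.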

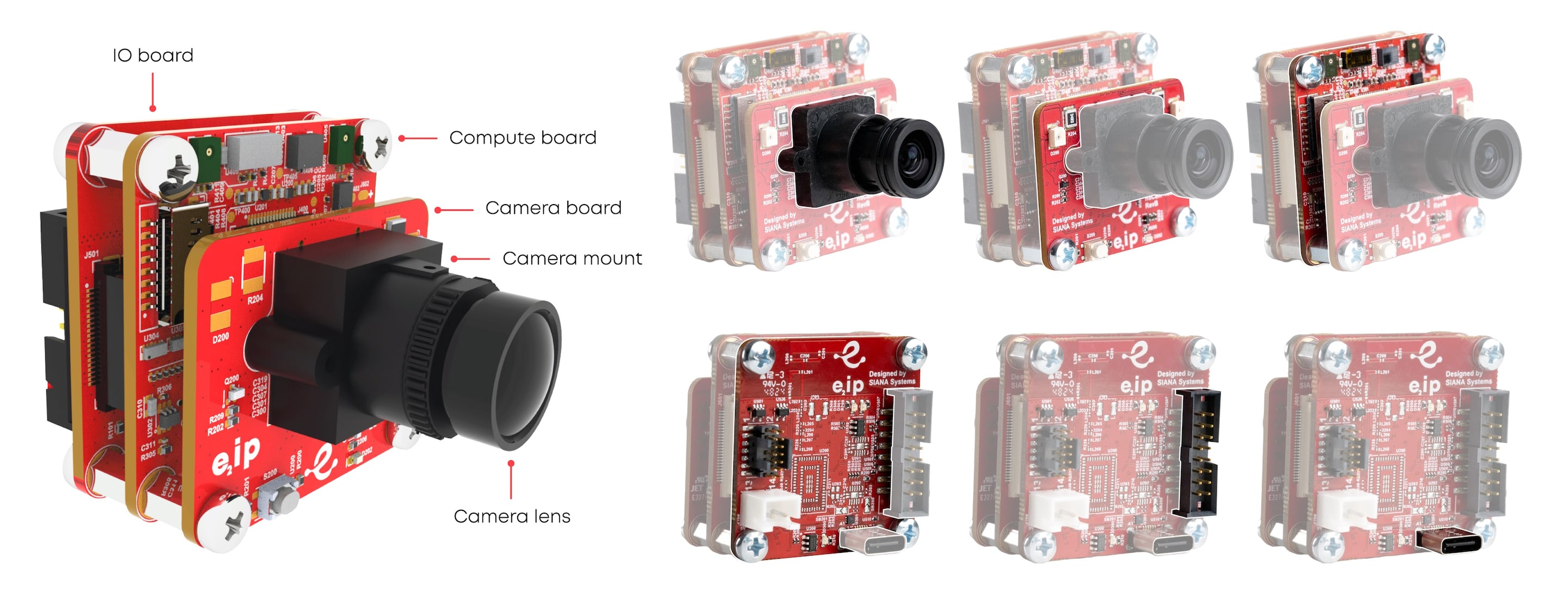

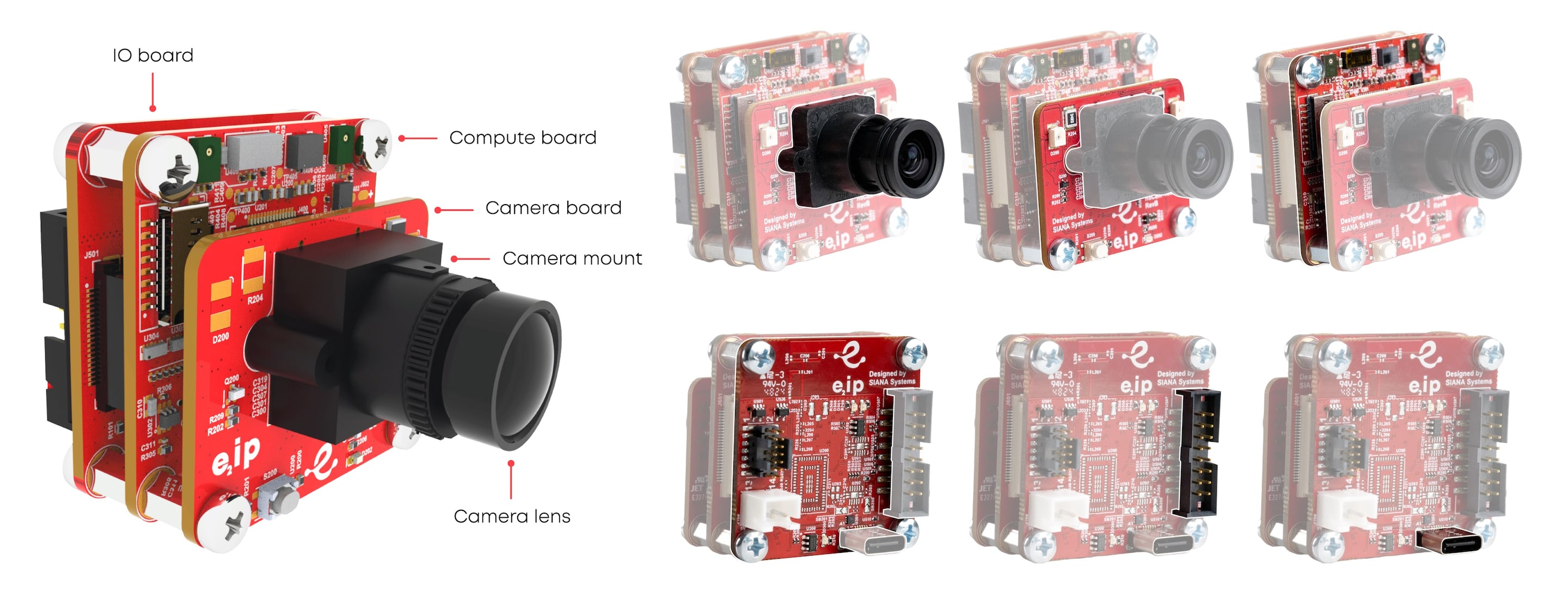

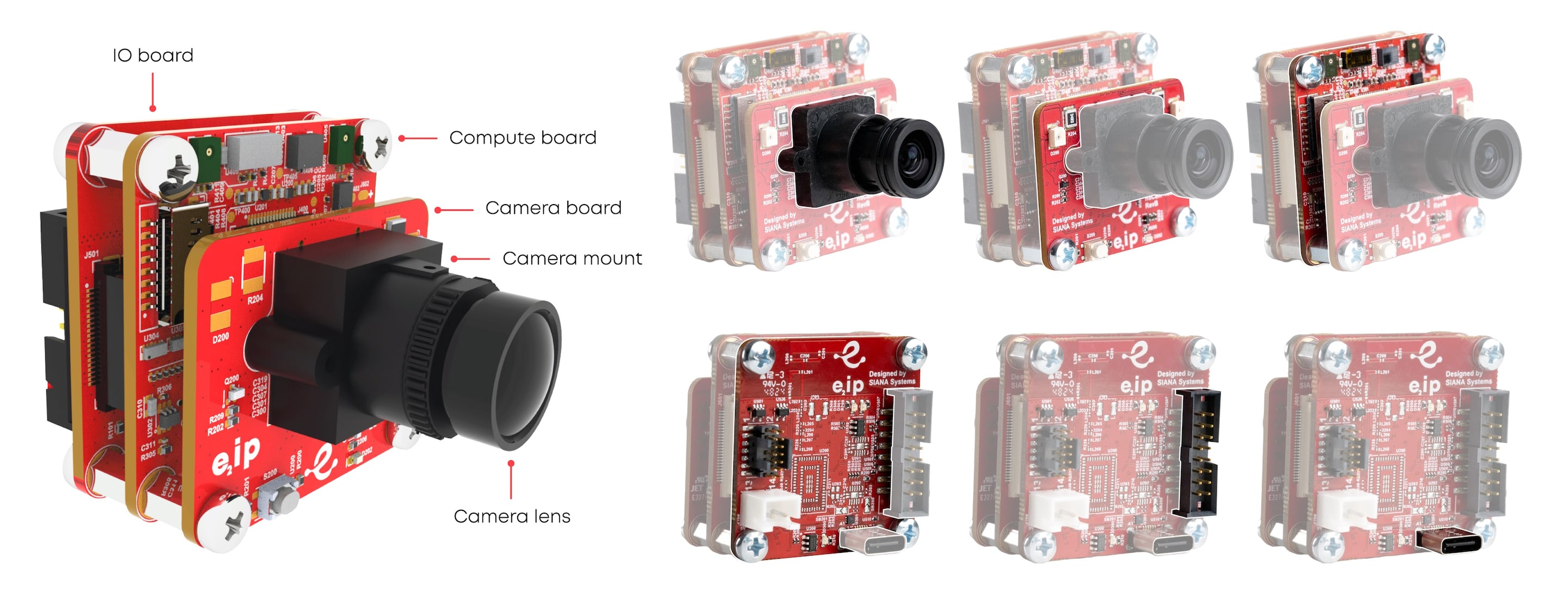

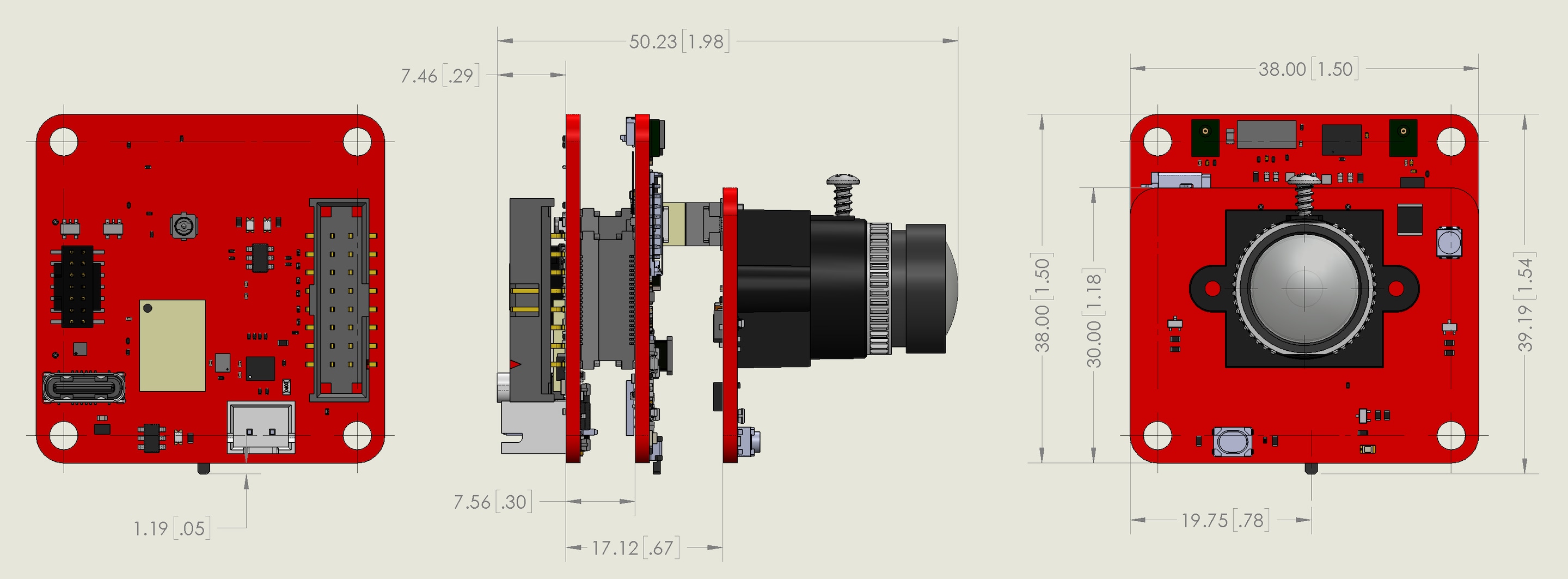

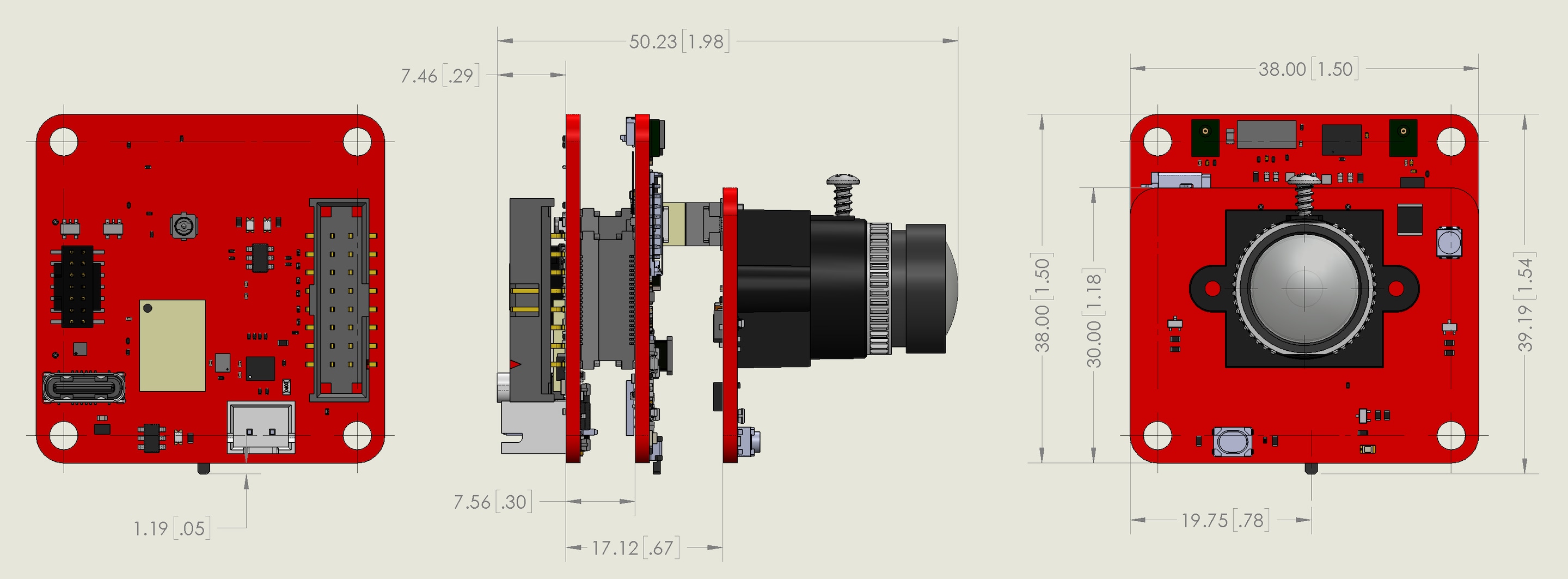

Sensor

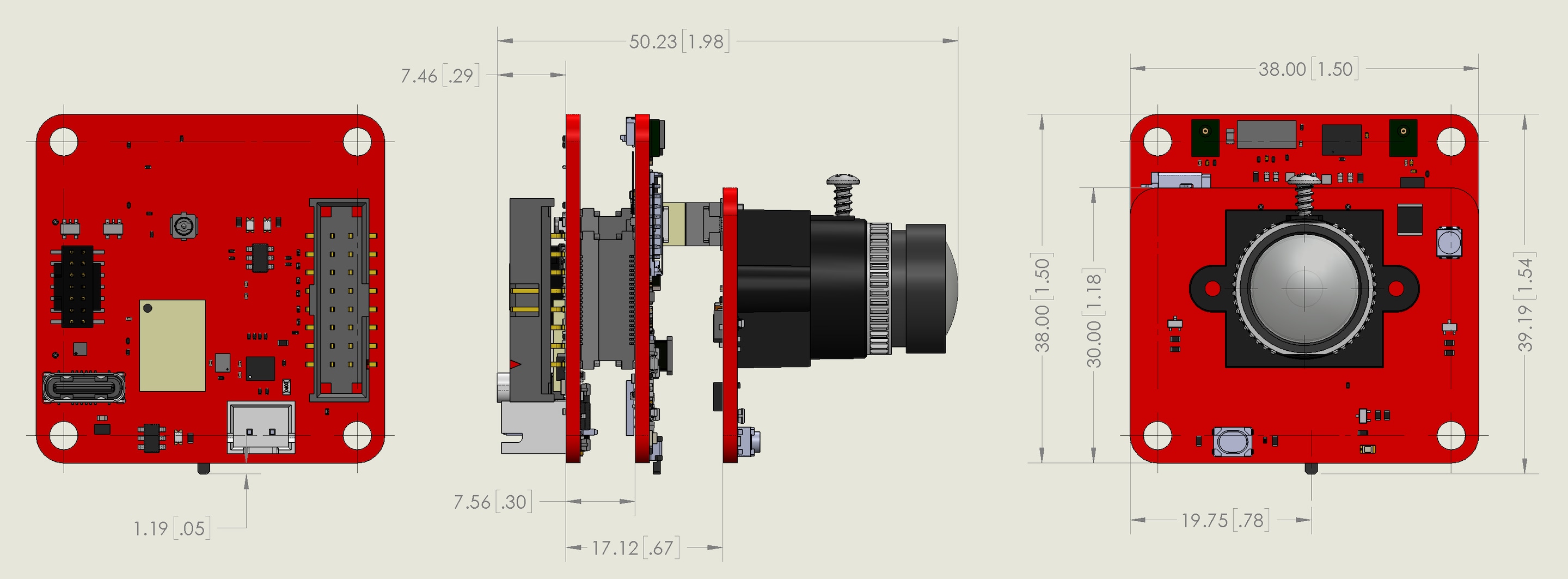

The sensor used in this mockup is the Sony IMX335 5MP RGB:

- Input: 2592x1944

- ISP resize: 960x960

- FPS: 15

E2IP will supply additional extension boards with various sensors to meet the specific requirements of the application.
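The resolutions above imply some simple geometry, sketched below in Python. The source does not say how the 4:3 sensor image is turned into a square frame, so the centered square crop here is an assumption, as is the idea that the 256×256 inference frame and the 960×960 video frame cover the same field of view. The function names are hypothetical.

```python
def center_square_crop(width, height):
    """Largest centered square inside a width x height image (assumed strategy)."""
    side = min(width, height)
    return (width - side) // 2, (height - side) // 2, side


def map_box(box, src_side=256, dst_side=960):
    """Scale a box from the inference frame to the video frame."""
    scale = dst_side / src_side
    return tuple(round(v * scale) for v in box)


x0, y0, side = center_square_crop(2592, 1944)
print(x0, y0, side)                    # crop origin and side length
print(960 / side, 256 / side)          # downscale factors for each output
print(map_box((16, 32, 64, 128)))      # box drawn on the 960x960 stream
```

Under these assumptions, a box detected in the 256×256 frame is scaled by 960 / 256 = 3.75 before being overlaid on the streamed video.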

Dataset and model

Dataset:

- Custom dataset (7 classes)

- 8k original images

- 115k augmented images

- 510k annotations

Model:

- YOLOv8-nano, provided by our partner Ultralytics.

- Input size: 256 x 256 x 3

- Trainable parameters: 3,031,321

- MACC: 6.73E+08

Results

Weights (Flash): 2.9 MB

Activations (RAM): 880 KB

Inference time: 33 ms

Inferences per second: 30
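As a cross-check, the reported figures can be related to one another with a few lines of arithmetic. The Python sketch below uses only numbers stated on this page. It assumes that 1 MB means 10^6 bytes and that each camera frame is processed once. The function name `derived_metrics` is hypothetical.

```python
def derived_metrics(params, macc, weights_bytes, inference_ms, camera_fps):
    """Derive throughput and footprint ratios from the reported figures."""
    inference_s = inference_ms / 1000.0
    frame_period_ms = 1000.0 / camera_fps
    return {
        "inferences_per_s": 1.0 / inference_s,
        "bytes_per_param": weights_bytes / params,
        "gmac_per_s": macc / inference_s / 1e9,
        "frame_budget_used": inference_ms / frame_period_ms,
    }


metrics = derived_metrics(
    params=3_031_321,      # trainable parameters
    macc=6.73e8,           # multiply-accumulate operations per inference
    weights_bytes=2.9e6,   # 2.9 MB of flash, assuming MB = 10^6 bytes
    inference_ms=33,       # reported inference time
    camera_fps=15,         # reported sensor frame rate
)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

A 33 ms inference corresponds to roughly 30 inferences per second, which matches the reported rate, and to an effective throughput of about 20 GMAC/s. The weights come to just under one byte per parameter, which would be consistent with 8-bit quantized weights, although the page does not state the quantization scheme. At 15 FPS, each inference uses about half of the 66.7 ms frame period.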

Author: E2IP Technologies & Siana Systems | Last update: May, 2025

Optimized with STM32Cube.AI

X-CUBE-AI, a free STM32Cube expansion package, lets developers automatically convert pretrained AI algorithms, such as neural networks and machine learning models, into optimized C code for STM32.

Most suitable for STM32N6 Series

The STM32 family of 32-bit microcontrollers based on the Arm Cortex®-M processor is designed to offer new degrees of freedom to MCU users. It offers products combining very high performance, real-time capabilities, digital signal processing, low-power / low-voltage operation, and connectivity, while maintaining full integration and ease of development.