Shifumi gesture recognition

Trigger actions on a PC by using a Time-of-Flight sensor to classify hand gestures. The model recognizes 3 different classes.

Gesture-based device control can bring many benefits, either by providing a better user experience or by supporting touchless applications for sanitary reasons. For demonstration purposes, we created 3 classes to distinguish several hand poses, but the model can be trained with any gestures, which opens up a wide range of new features for the end user.

NanoEdge AI Studio supports the Time-of-Flight sensor, but this application can also be addressed with other sensors, such as radar.

Approach

- We set the detection distance to 20 cm to reduce the influence of the background. Optionally, the measured distances can be binarized.

- We took 10 measurements (at 15 Hz) and predicted a class for each one. We then kept the class that appeared most often (a majority vote).

- Concatenating measurements into a longer signal is useful for studying how a movement evolves over time. Here, our goal was to classify a static sign, so no temporal information is needed.

- We created a dataset with 3,000 records per class (rock, paper, scissors), excluding empty measurements (no motion).

- Finally, we created an 'N-Class classification' model (3 classes) in NanoEdge AI Studio and tested it live on a NUCLEO-F401RE.
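The per-measurement preprocessing and the vote over 10 predictions described above can be sketched as follows. This is a minimal illustration, not the code used for the demo: it assumes distances are reported in millimetres, that limiting the detection distance is done by clamping readings to 200 mm, and that `classify_frame` is a hypothetical callable wrapping whatever model produces a class for one 64-value signal. All function names are illustrative.

```python
from collections import Counter
from typing import Callable, List, Sequence

MAX_DISTANCE_MM = 200      # 20 cm detection distance (millimetre unit is an assumption)
FRAMES_PER_DECISION = 10   # number of measurements used for one decision


def preprocess(frame: Sequence[float], binarize: bool = False) -> List[float]:
    """Limit the influence of the background; optionally binarize the distances."""
    if binarize:
        # 1.0 = object closer than the limit, 0.0 = background
        return [1.0 if d < MAX_DISTANCE_MM else 0.0 for d in frame]
    # Assumption: background readings are clamped to the limit
    return [min(d, MAX_DISTANCE_MM) for d in frame]


def majority_vote(labels: Sequence[str]) -> str:
    """Return the most frequent label (on a tie, the label seen first wins)."""
    return Counter(labels).most_common(1)[0][0]


def classify_gesture(
    frames: Sequence[Sequence[float]],
    classify_frame: Callable[[List[float]], str],  # hypothetical model wrapper
    binarize: bool = False,
) -> str:
    """Predict one class per measurement, then keep the most frequent class."""
    labels = [classify_frame(preprocess(f, binarize)) for f in frames]
    return majority_vote(labels)


if __name__ == "__main__":
    # Dummy classifier for demonstration only: it is NOT the NanoEdge AI model.
    def dummy(signal: List[float]) -> str:
        return "paper" if sum(signal) < 64 * 150 else "rock"

    frames = [[100.0] * 64 for _ in range(FRAMES_PER_DECISION)]
    print(classify_gesture(frames, dummy))
```

At 15 Hz, collecting 10 measurements takes roughly 0.67 s, so the vote trades a short delay for a decision that is less sensitive to a single misclassified measurement.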

Sensor

Time-of-Flight sensor (8x8 zones)

Data

Signal length: 64 (successive 8x8 matrices)

Data rate: 15 Hz
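To make the signal format concrete, here is a small sketch of how one 8x8 distance matrix could be turned into a 64-value signal and written as one dataset line. The row-major ordering, the one-signal-per-line layout, and the comma separator are assumptions made for illustration; check the import settings of your own project before relying on them. The helper names are hypothetical.

```python
from typing import List, Sequence

GRID_SIZE = 8  # the sensor output is an 8x8 matrix, i.e. 64 values per signal


def flatten_frame(frame: Sequence[Sequence[float]]) -> List[float]:
    """Flatten an 8x8 matrix into a 64-value signal (row-major order assumed)."""
    if len(frame) != GRID_SIZE or any(len(row) != GRID_SIZE for row in frame):
        raise ValueError("expected an 8x8 frame")
    return [value for row in frame for value in row]


def to_dataset_line(signal: Sequence[float], separator: str = ",") -> str:
    """Format one signal as a single text line (separator is an assumption)."""
    return separator.join(str(v) for v in signal)


if __name__ == "__main__":
    # Synthetic frame for demonstration only, not real sensor data.
    frame = [[row * GRID_SIZE + col for col in range(GRID_SIZE)]
             for row in range(GRID_SIZE)]
    signal = flatten_frame(frame)
    print(len(signal))
    print(to_dataset_line(signal))
```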

Results

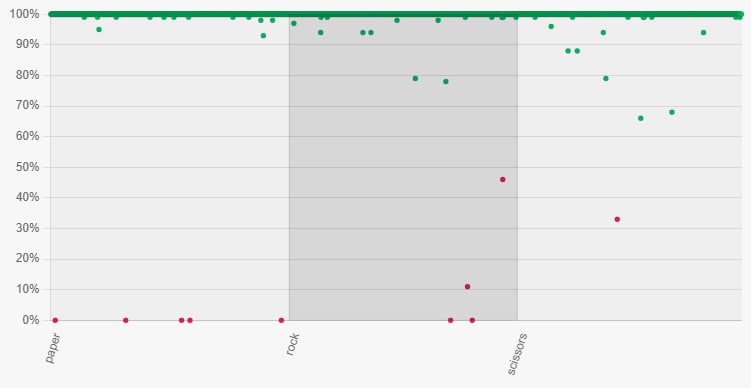

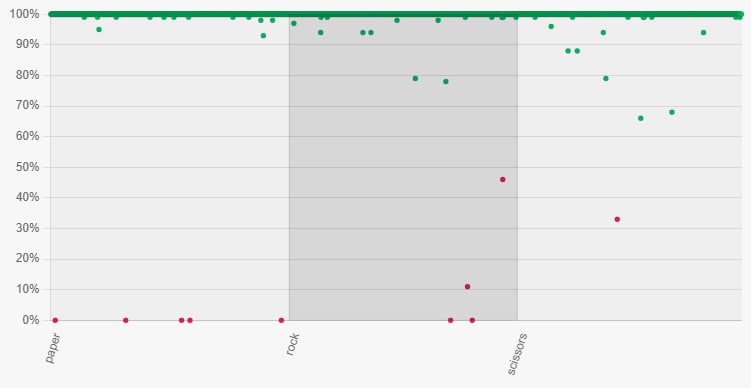

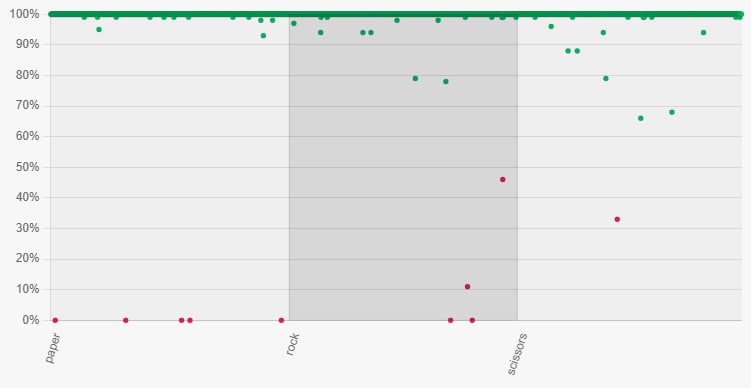

3-class classification:

99.37% accuracy, 0.6 KB RAM, 192.2 KB Flash

Green points represent correctly classified signals. Red points represent misclassified signals. The classes are on the x-axis and the confidence of the prediction is on the y-axis.

Resources

Model created with NanoEdge AI Studio

Free AutoML software for adding AI to embedded projects. It guides users step by step to find the optimal AI model for their requirements.

The STM32 family of 32-bit microcontrollers based on the Arm Cortex®-M processor is designed to offer new degrees of freedom to MCU users. It offers products combining very high performance, real-time capabilities, digital signal processing, low-power / low-voltage operation, and connectivity, while maintaining full integration and ease of development.